Four Cost-Effective Ways to Perform Early Life Cycle Validation in a Software Testing Project

Early life cycle validation is not a new concept in the software testing space. It focuses on the testing team validating the key outcomes of the upstream phases of software development and testing. Yet many testing projects have failed to implement these practices effectively, primarily because they were implemented inconsistently or incorrectly. This point of view is based on the author’s experience and describes the four most effective ways to implement early life cycle validation practices in a testing project. These practices help a project achieve better productivity and application quality, and they reduce overall project cost because defects are caught and fixed in the very early stages of the project.

Why “Early Lifecycle Validation” in software testing?

Gone are the days when software quality used to be inspected only towards the last phase of its life cycle i.e. say during system testing OR performance testing. Early Lifecycle Validation (ELV) is Or Shift left (as often termed as) a norm which is getting popular in all kinds of software testing projects. In this approach, the focus is on thorough validation of the deliverables of upstream life cycle processes by the testing team. The key benefits of ELV include

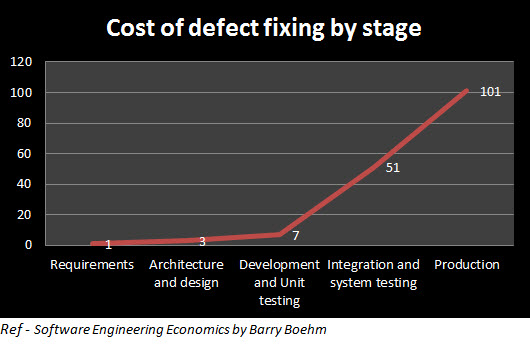

- Effort and cost to fix the defects is less as cost of fixing a defect rises exponentially with each Life Cycle stage

- Ensures best in class quality of the system as it ensures good input quality for every stage of the project

- Software Development Life Cycle (SDLC )

- Detecting defects early in the lifecycle saves in overall cycle time for the project and thus ensure better time to market
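A minimal Python sketch can make the cost argument concrete. It assumes a hypothetical five-stage life cycle and a hypothetical growth factor of 3 per stage; neither figure comes from this article, so substitute data from your own projects before drawing conclusions.

```python
# Hypothetical life cycle stages, in order; the index drives the cost growth.
STAGES = ["requirements", "design", "coding", "testing", "production"]


def relative_fix_cost(stage: str, growth_factor: float = 3.0) -> float:
    """Relative cost of fixing one defect found at `stage`.

    Models exponential growth: cost = growth_factor ** stage_index, so a
    defect fixed during requirements costs 1.0. The default growth_factor
    of 3.0 is an illustrative assumption, not a measured value.
    """
    return growth_factor ** STAGES.index(stage)


def shift_left_saving(defects: int, found_at: str, otherwise_found_at: str,
                      growth_factor: float = 3.0) -> float:
    """Relative cost saved by fixing `defects` earlier than they would be."""
    late = relative_fix_cost(otherwise_found_at, growth_factor)
    early = relative_fix_cost(found_at, growth_factor)
    return defects * (late - early)


for stage in STAGES:
    print(f"{stage:>12}: {relative_fix_cost(stage):5.1f}x")
print(shift_left_saving(10, "requirements", "testing"))
```

Under these assumptions, 10 defects caught during requirements instead of testing save 10 × (27 - 1) = 260 relative cost units. The shape of the curve, not the exact factor, is what the sketch illustrates.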

Key success criteria

The key success criteria for implementing the early life cycle validation approach are:

- Collaborative approach – ELV activities demand a collaborative approach involving all relevant teams, such as client stakeholders, business users, the development team, the architecture team, the functional testing team, performance and other specialized testing teams, and the help documentation team. Every team needs to share a single vision of best-in-class application quality in optimal cycle time, and should be open to suggestions and issues about its work products raised by members of other teams.

- Availability of appropriate skill sets – The key people who perform these activities need the following skills:

  - Excellent domain and application knowledge

  - A very good understanding of the IT technologies used in the project

- Customer willingness to invest in ELV activities – ELV does not recommend cutting any existing appraisal activities of the SDLC, such as design reviews and code reviews. ELV activities are suggested over and above these basic appraisal activities. Hence the client must be willing to spend the extra money, effort and time needed to perform them. The expected ROI is very high compared to the investment made.

If any one of the points above is missing, the overall ELV approach will not be effective.

Various approaches to ELV

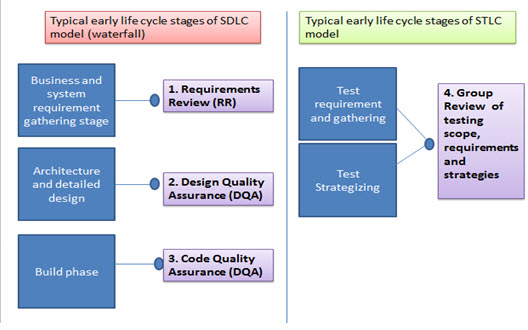

There are multiple approaches to performing ELV, but in my opinion the following are the top four approaches for implementing ELV in testing projects.

1. Requirements Review (RR)

The testing team can start on this approach as soon as the Business Requirements Document (BRD) and System Requirements Specification (SRS) are baselined by the concerned stakeholders from the client and development teams. Before the subsequent SDLC phase (design) begins, there should be a phase in which the testing team thoroughly reviews the business and system requirements. Within the QA team, there should be people who can play the role of a “User Proxy”: someone who can wear the client’s hat, understand the essentials and details of the system, and think from the user’s perspective. The user proxy should also have very good knowledge of the application under consideration, as well as of the domain and overall industry trends. For instance, if the system under consideration addresses legal compliance, the user proxy should be aware of:

- The legal aspects the compliance system is built for, in complete detail

- Industry trends, such as what other companies and competitors are doing to comply with the same requirements

The user proxy can pick more members from the team and perform these activities in group review mode. Both requirement documents (BRD and SRS) are thoroughly reviewed from three key perspectives: functional verification, structural verification and conformance verification.

Functional Verification – The team ensures that the business requirements give an accurate and complete representation of the business goals and user needs, and that these are correctly translated into system requirements. For example, the team can come up with observations like the ones below:

| Comment | Implication/Objective |
|---|---|
| BRD – “All past salary slip should be made available to employees”. | Need to resolve this right away rather than have it caught in the testing or production phases. |
| SRS – The mention of financial requirement X deviates from SOX compliance. Why? | Need to discuss and close this now; otherwise it may lead to a penalty if it is discovered only in production. |

Structural Verification – The ELV team checks the requirements from a structural perspective: they look for ambiguities of expression, seek clarification, restate the requirements in structured natural language (say, English) and apply “Cause Effect Graphing” or “Decision Table” techniques for a better and clearer representation. They also use natural-language checklists and tools such as ambiguity checkers to verify the requirements against the pitfalls of natural language.
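The “Decision Table” technique can be sketched in Python: enumerate every combination of condition values and check that each one has a rule. The condition and action names below are hypothetical, loosely modeled on a salary-update requirement; the point is that a missing combination surfaces as a question for the requirement authors rather than a guess by the developer.

```python
from itertools import product

# Hypothetical conditions for a requirement such as "if it is transaction x,
# update the related EPF and salary structure data".
CONDITIONS = ["is_transaction_x", "employee_enrolled_in_epf"]

# Each rule maps one combination of condition values to the required actions.
DECISION_TABLE = {
    (True, True): ["update_epf", "update_salary_structure"],
    (True, False): ["update_salary_structure"],
    (False, True): [],
    # (False, False) is deliberately missing to show how gaps are found.
}


def missing_rules(conditions, table):
    """Return every combination of condition values that has no rule."""
    combos = product([True, False], repeat=len(conditions))
    return [combo for combo in combos if combo not in table]


def actions_for(conditions, table, facts):
    """Look up the required actions for one concrete case (e.g. a test case)."""
    key = tuple(facts[name] for name in conditions)
    return table[key]


print(missing_rules(CONDITIONS, DECISION_TABLE))
print(actions_for(CONDITIONS, DECISION_TABLE,
                  {"is_transaction_x": True, "employee_enrolled_in_epf": False}))
```

A side benefit of a complete table is that each rule maps directly to one test case, which the testing team can reuse later.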

For example, the team can come up with observations like:

| Comment | Implication/Objective |
|---|---|
| SRS – “If it is transaction x then update relevant corresponding data like EPF, the salary structure etc.” | What is the meaning of this “etc.”? Do any more records need to be updated? We cannot leave this to an individual’s interpretation. |
| SRS – “Accounts are reviewed for discrepancies at the appropriate time” | Need to state explicitly and clearly what the appropriate time is. |
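Ambiguity checkers vary, but a minimal sketch of the idea is a scan for weak phrases like the ones flagged above. The phrase list below is a small, hypothetical sample; a real checklist would be longer and tuned to the domain, and a tool can only flag candidates for a human reviewer.

```python
import re

# Hypothetical sample of weak phrases that often signal ambiguity.
WEAK_PHRASES = ["etc.", "appropriate", "as needed", "and/or",
                "relevant", "user-friendly", "if possible"]


def find_ambiguities(requirement: str) -> list[str]:
    """Return the weak phrases that occur in a requirement, case-insensitively."""
    text = requirement.lower()
    found = []
    for phrase in WEAK_PHRASES:
        # Lookarounds restrict matches to whole words or phrases.
        pattern = r"(?<!\w)" + re.escape(phrase) + r"(?!\w)"
        if re.search(pattern, text):
            found.append(phrase)
    return found


print(find_ambiguities("If it is transaction x then update relevant "
                       "corresponding data like EPF, the salary structure etc."))
print(find_ambiguities("Accounts are reviewed for discrepancies at the appropriate time"))
```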

Conformance Verification – The ELV team ensures conformance to requirements management processes in terms of the Requirement Traceability Matrix (RTM), requirement prioritization, the requirement change procedure, process adherence, etc. The team can come up with observations like:

| Comment | Implication/Objective |
|---|---|
| Check the RTM and review the traceability from BRD to SRS. For example, the BRD talks about 5 policies but the SRS mentions only 4. | Need to clarify and settle the exact number of policies to be implemented. |
| Check whether any process is defined for Requirement Change Requests (RCR). | Primarily to check whether it is adequate. Does the QA team have a role in the RCR management process? When will the QA team learn of an RCR? |
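The RTM check in the first row can be sketched as two set operations: forward traceability (every BRD item reaches the SRS) and backward traceability (every SRS item has a BRD parent). The requirement IDs below are hypothetical and simply mirror the 5-policies-versus-4 example.

```python
from collections import defaultdict

# Hypothetical RTM rows (BRD ID, SRS ID): 5 BRD policies, only 4 traced.
BRD_IDS = ["BRD-POL-1", "BRD-POL-2", "BRD-POL-3", "BRD-POL-4", "BRD-POL-5"]
SRS_IDS = ["SRS-1", "SRS-2", "SRS-3", "SRS-4"]
RTM = [
    ("BRD-POL-1", "SRS-1"),
    ("BRD-POL-2", "SRS-2"),
    ("BRD-POL-3", "SRS-3"),
    ("BRD-POL-4", "SRS-4"),
]


def untraced(upstream_ids, rtm):
    """Upstream requirements with no downstream link (forward traceability)."""
    links = defaultdict(set)
    for upstream, downstream in rtm:
        links[upstream].add(downstream)
    return [req for req in upstream_ids if not links[req]]


def orphans(downstream_ids, rtm):
    """Downstream items with no upstream parent (backward traceability)."""
    parents = {downstream for _upstream, downstream in rtm}
    return [item for item in downstream_ids if item not in parents]


print(untraced(BRD_IDS, RTM))
print(orphans(SRS_IDS, RTM))
```

The same two checks can be rerun for each later pair of stages (SRS to design, design to code) as the RTM grows.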

All these observations should be discussed with the relevant teams and stakeholders, and we need to ensure that both the business and system requirements documents are updated accordingly.

Thus this step ensures that the requirements are clear, unambiguous and complete.

2. Design Quality Assurance (DQA) – applicable for both high level and low level design

If a thorough “Requirements Review” phase has been carried out, the design and architecture team receives clear requirements from which to create the design, i.e. the system architecture (high level design, or HLD) and the low level designs (LLD). Once the high level design or architecture phase is over, the ELV team comes together again to perform design quality assurance (DQA) activities. The ELV team is expected to have good working knowledge of the technologies, databases, reporting tools, etc. The team can take the following approach for DQA:

- Based on the business and system requirements, come up with a list of top requirements and priorities against which the system architecture needs to be verified

- The architecture team explains the overall architecture of the system to the ELV team

- The ELV team audits the design to verify that the architecture will deliver the intended business requirements of the system.

- Some sample design audit questions/observations:

| Comment | Implication/Objective |
|---|---|
| Per the interfacing applications, MyApp should pull in data from 7 databases and 5 systems, but the architecture diagram shows only 7 databases and 4 systems. | Check this and ensure there is provision for pulling data from all the relevant interfaces. |
| The architecture diagram shows no component for “Reporting”, but the SRS has a separate tab for reporting. | Is this a genuine miss, or is it represented in a different way? |
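Both observations above are coverage gaps between what the requirements need and what the architecture shows. A minimal Python sketch, assuming the interfaces and components can be listed as hypothetical inventories, is a per-category set difference:

```python
# Hypothetical inventories; names are illustrative only.
REQUIRED = {
    "databases": {f"DB{i}" for i in range(1, 8)},   # 7 databases
    "systems": {f"SYS{i}" for i in range(1, 6)},    # 5 interfacing systems
    "components": {"Reporting"},                     # reporting tab in the SRS
}
DESIGNED = {
    "databases": {f"DB{i}" for i in range(1, 8)},
    "systems": {f"SYS{i}" for i in range(1, 5)},    # only 4 systems drawn
    "components": set(),                             # no Reporting component
}


def coverage_gaps(required, designed):
    """Map each category to the required items missing from the design."""
    gaps = {}
    for category, items in required.items():
        missing = items - designed.get(category, set())
        if missing:
            gaps[category] = sorted(missing)
    return gaps


print(coverage_gaps(REQUIRED, DESIGNED))
```

A gap is a prompt for discussion, not a verdict: as the second row notes, the item may be represented in a different way.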

All such observations should be discussed with the relevant teams and stakeholders, and the high level design and architecture documents should be updated accordingly.

Thus this step ensures that the high level design and architecture cater to the technical needs of the business and system requirements.

A similar approach can be taken once the detailed design phase is over. In this case the ELV team can audit the low level design on a sample basis, asking the architecture team to walk them through the detailed design of some critical functionality. Some sample audit questions:

| Comment | Implication/Objective |
|---|---|
| Security aspect – For banking information, are you requiring an extra login before retrieving this information for the user? | To check the security implementation |
| Trigger-based activities – For example, an email on trading window closure on the respective dates. How are these implemented? Where are the dates picked up from? | To validate the trigger-based approach |

All such observations should be discussed with the relevant teams and stakeholders, and the corresponding design documents should be updated accordingly.

Thus this step ensures that the low level design caters to the technical needs of the business and system requirements.

3. Code Quality Assurance (CQA)

If the “Design Quality Assurance” phase has been carried out, the build team receives a clear design from which to write the code. Once the build phase is over, the ELV team gathers again to perform code quality assurance (CQA) activities. The ELV team is expected to have working knowledge of the technologies, databases, reporting tools, etc. The team can take the following approach for CQA:

- Based on the business requirements, come up with a list of top requirements and priorities whose implementation in code needs to be verified

- The development team walks the ELV team through the key code implementations for the critical functionality and features that the ELV team wants to audit.

- The ELV team audits the code to verify that it will deliver the intended business requirements of the system.

- The ELV team can also use product quality analyzer tools that analyze the code statically to check its structural quality. Such tools can create detailed reports on the health of the code from various aspects, such as maintainability, performance best practices, security best practices and whether the code is written in an optimized way.

- Some sample audit questions for CQA:

| Comment | Implication/Objective |
|---|---|
| Is the requirement traceability matrix complete up to the coding phase for all BRD requirements? | To check traceability |
| Are the coding standards for the underlying language followed? Is there evidence, such as filled-in checklists? | Assures consistency and that coding best practices are reflected in the artifacts |
| Explain the critical algorithm and logic of the income tax calculation. | To understand the basic logic of a key feature |
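Commercial product quality analyzers go far beyond this, but a minimal sketch of static checking for the coding-standards question can be built on Python's standard `ast` module. The three rules below (snake_case names, a maximum function length, a docstring on every function) and the 30-line limit are hypothetical examples of what a coding standard might require.

```python
import ast
import re

SNAKE_CASE = re.compile(r"[a-z_][a-z0-9_]*")
MAX_FUNCTION_LINES = 30  # hypothetical limit; take the real one from your standard


def audit_source(source: str) -> list[str]:
    """Return simple coding-standard findings for a piece of Python source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        where = f"line {node.lineno}: function '{node.name}'"
        if not SNAKE_CASE.fullmatch(node.name):
            findings.append(f"{where} is not snake_case")
        length = node.end_lineno - node.lineno + 1
        if length > MAX_FUNCTION_LINES:
            findings.append(f"{where} has {length} lines")
        if ast.get_docstring(node) is None:
            findings.append(f"{where} has no docstring")
    return findings


# Hypothetical code under audit.
SAMPLE = '''
def CalcTax(income):
    return income * 0.1

def net_pay(gross):
    """Return pay after tax."""
    return gross - CalcTax(gross)
'''

for finding in audit_source(SAMPLE):
    print(finding)
```

Because the code is parsed rather than executed, such checks are cheap to run on every build, which makes them a natural source of the “evidence” the audit question asks for.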

All such observations should be discussed with the relevant teams and stakeholders, and the resulting changes should be implemented in the code.

Thus this step ensures that the build phase considers all important aspects of business needs for the system.

4. Group Review of testing scope, requirements and strategies

In the three processes above, the QA team reviews the deliverables of the upstream SDLC processes: requirements, design and coding. In this practice, the outcomes of the upstream phases of the STLC (Software Testing Life Cycle) are validated by the other concerned stakeholders. This is a very simple but powerful step to ensure that the testing scope, test requirements and test strategies outlined for the system are adequate and aligned with the desired outcome from the system. A group review approach can be used, in which stakeholders from all relevant subgroups take part and the QA lead coordinates. All relevant documents that capture the test scope, test requirements, test strategies and approach should be reviewed to check:

- Whether the testing will be adequate

- Any obvious issues these groups can see in the testing approach

- The choice of test strategies

- Correct understanding of the test scope and test requirements

Sample audit questions for such a group review:

| Comment | Implication/Objective |
|---|---|
| The tool chosen by QA for test automation is “X” and the automation technique is “data driven”. But the tool should be “Y” and the technique should be “keyword driven”. | This is input from one of the group review members, based on her experience in a similar project where they faced issues with tool X and the “data driven” methodology. Had this Fagan inspection not happened, this team would also have faced similar issues with their choice. |

With this review, possible issues and hindrances come out early, and the QA team has a chance to correct them at the very beginning of the STLC rather than letting them traverse the later STLC phases and be discovered very late. The involvement of every subgroup also ensures that the testing approach is adequate and correct for each part of the system.

Conclusion

As we have seen, software quality can be dramatically improved by adopting the simple but powerful techniques described above, as summarized in the diagram below.

We can catch defects at a much earlier stage of the SDLC or STLC rather than towards the tail end of the process. We have also seen a sample ROI for performing these activities. All the concerned stakeholders must adopt a collaborative approach to make these practices effective. We have seen their benefits in projects in our organization that implemented them. The benefits from select case studies are as follows:

- Improved test case preparation and execution productivity in the range of 19-34%

- 600 to 750 defects detected using ELV techniques (which would otherwise have been detected only during QA)

- Cost of quality savings in the range of 300 K USD to 3.4 M USD

- Testing effectiveness of about 99-99.8%, ensuring extremely high product/application quality when it reaches the UAT/production stage

The effort and cost incurred to conduct these activities was exceptionally low, in the range of 10-40 K USD, giving us very high returns on the investment.
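The case studies are reported as ranges without pairing each saving to its cost, so only bounds on the ROI can be derived. Assuming every project’s saving and cost fall inside the reported ranges, the smallest saving over the largest cost gives a floor, and the largest saving over the smallest cost gives a ceiling:

```python
def roi(saving: float, cost: float) -> float:
    """Return on investment as a multiple of cost: (saving - cost) / cost."""
    return (saving - cost) / cost


# Ranges reported above, in USD.
SAVING_RANGE = (300_000, 3_400_000)
COST_RANGE = (10_000, 40_000)

# Bounds only: the individual projects behind these ranges are not paired.
lower_bound = roi(SAVING_RANGE[0], COST_RANGE[1])
upper_bound = roi(SAVING_RANGE[1], COST_RANGE[0])
print(f"ROI between {lower_bound:.1f}x and {upper_bound:.1f}x the investment")
```

Even the conservative floor means each dollar spent on these activities returned several dollars in cost of quality savings.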

Hence these methods have been observed to be the most cost-effective methods of early life cycle validation in testing assignments.

Don’t forget to leave your comments below.
