The Philosophical Data Analyst (Part 2): The Problem of Induction

Most organizations understand data is an asset, providing a rich resource that can be analyzed to unearth predictive insights. Many organizations invest heavily in their data operations to ensure the ongoing completeness, integrity, and accuracy of collected data. However, regardless of how complete, correct, and/or unbiased collected data may be, there are limits as to what insights can be gleaned from a given dataset. Acting on analytical insights outside these limits introduces risk.

This article describes the problem of induction – assuming you know more than you do – in the context of predictive analytics. It describes how the problem can be exacerbated by seemingly improbable or unlikely events. Finally, it outlines how PESTLE can be used to explore the limitations of the analysis, allowing Business Data Analysts to assess and mitigate risks.

The Problem of Induction

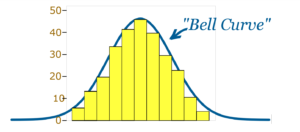

The Problem of Induction is not new. It has been explored by philosophers since at least the 18th century (Henderson, 2018). Put simply, the problem is the illusion of understanding: people think they know what is going on when the situation is more complicated (or random) than they realize (Taleb, pg. 8).

In his book The Black Swan, Nassim Nicholas Taleb uses the plight of a Thanksgiving turkey to illustrate the problem (Taleb, pgs. 40-41). Assume a given turkey is fed every morning for 1000 days. On the first day, being fed is probably a welcome surprise to the turkey. After a few days, the turkey will start to notice a pattern and become more confident that it will be fed the following day. Over the course of the 1000 days, the turkey’s confidence will grow to the point that it expects, and indeed is quite certain, that it will be fed the following day.

However, on day 1001, the turkey is not fed and is instead slaughtered, an event that would seem completely unpredictable (a black swan) to the turkey given its experience over the prior 1000 days. Of course, if the turkey had known about the tradition of Thanksgiving, it might have been able to factor this into its predictions. Alas, this information was outside the realms of the turkey’s knowledge and experience.
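One way to make the turkey’s growing confidence concrete is Laplace’s rule of succession, which estimates the probability of the next success as (s + 1) / (n + 2) after s successes in n trials. This rule is not taken from Taleb or from this article; it is an illustrative assumption, and the function name below is hypothetical. The sketch shows the estimate creeping toward certainty as feeding days accumulate, even though nothing in the data speaks to events the turkey has never observed.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace's estimate of the chance that the next trial succeeds.

    Assumption: every day is treated as an independent trial with the
    same unknown chance of being fed. The turkey's story is precisely a
    case where that assumption silently fails.
    """
    return Fraction(successes + 1, trials + 2)


# The turkey has been fed on every one of the days observed so far.
for days in (1, 10, 100, 1000):
    estimate = rule_of_succession(days, days)
    print(f"after {days:>4} days fed: P(fed tomorrow) ~ {float(estimate):.4f}")
```

The estimate never reaches 1, but it gets close enough that the turkey would act as if it had. The calculation is internally sound; the error lies in applying it outside the conditions under which the data were gathered.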

Taleb uses black swans to describe events that seem improbable and/or unfathomable. Before the “discovery” of Australia, the idea that a swan could be anything other than white seemed preposterous to Europeans. However, a single sighting of a black swan by European settlers was enough to invalidate the understanding of an entire continent (although for the local Whadjuk Noongar people*, the idea of a swan being anything but black would have been equally preposterous, perhaps making the coming of European settlers their white swan event?).

The year 2020 provided an example of a black swan that no doubt exacerbated the problem of induction for many organizations. Most organizations failed to foresee the rise of COVID-19 and its impact simply because it was outside their lived experience. Neither its occurrence nor its impact could be reliably inferred from the information they had, just as the turkey could not foresee its demise. As such, predictions for 2020 based on historical information were unlikely to be accurate.

Note that this does not mean the pandemic and its impact could not be predicted to some degree. Just as the Whadjuk Noongar have always known swans could be black, health and virology experts had been publicly predicting, and even planning for, a pandemic for some time (see Rosling 2018, pgs. 237-238 and NHS England, 2013). In addition, available analysis from previous outbreaks of disease, such as SARS and Swine Flu, could have provided some insights into the impact of a pandemic (see Smith et al. 2009, Australian Government Treasury 2007). However, until 2020, a pandemic was not part of the lived experience of the vast majority of organizations. Therefore, it was unlikely to be factored into their analysis and planning.

Containing the Problem of Induction: Know Your Limits

In the context of predictive data analytics, the problem of induction is often exacerbated by:

- Assumed Continuity – when analysis implicitly assumes that the conditions under which data were collected and analyzed will be sustained. An organization may “believe they are thinking about the future, but they are usually just extrapolating the present, and that’s not the same thing at all.” (Lovelock, 2020).

- Information Blind Spot – when relevant information is not considered in, or has been omitted from, the analysis.

Relying on predictive analytics without understanding its limitations can lead to a false level of confidence in predictions. This may mean organizations continue to ‘trust’ analysis beyond the point of reliability, taking longer to respond to changes in conditions. (It worked before; it should work now!)
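As a minimal sketch of guarding against assumed continuity, the check below flags new inputs that fall outside the range seen in the historical data a model was built on. Everything here is hypothetical: the function names, the `tolerance` parameter, and the demand figures are invented for illustration and are not part of the article’s method.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ObservedRange:
    """Lowest and highest values seen in the historical data."""
    low: float
    high: float


def observed_range(history: Sequence[float]) -> ObservedRange:
    """Summarize the conditions under which the data were collected."""
    return ObservedRange(min(history), max(history))


def outside_experience(value: float, seen: ObservedRange,
                       tolerance: float = 0.0) -> bool:
    """Return True when a value lies beyond the observed range.

    `tolerance` widens the range by a fraction of its span
    (a hypothetical knob; 0.0 means no widening).
    """
    margin = tolerance * (seen.high - seen.low)
    return value < seen.low - margin or value > seen.high + margin


# Hypothetical monthly demand figures used to build a forecast.
history = [102.0, 98.5, 110.2, 105.7, 99.9]
seen = observed_range(history)

for new_value in (104.0, 35.0):
    status = "outside" if outside_experience(new_value, seen) else "within"
    print(f"{new_value}: {status} the historical range")
```

A value inside the range does not prove that conditions are unchanged, so a check like this can only prompt a review, not replace one.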

Techniques such as PESTLE can provide an effective frame for exploring the limits of predictive analysis. By assessing the reliability of analysis under different scenarios, Business Data Analysts can understand and communicate limitations. The table below uses PESTLE to help identify some high-level scenarios to explore.

| Category | Example scenarios |
| --- | --- |
| Political | Change in government; Market intervention (think quantitative easing); Legislative changes; Change in political stability (think Arab Spring); Act of terrorism (think 9/11); War |
| Economic | Interest rate change; Cost-of-living changes; Global trade and/or supply chain issues; Recession or economic shock |
| Social | Health care or housing availability crisis; Social movement (think Me Too, Black Lives Matter); Mass migration (think Syrian Civil War); Epidemic/Pandemic |
| Technological | ICT security incident (think ransomware); Disruptive technology (think Uber, Airbnb); Scientific discovery/scrutiny (think smoking and cancer); Severe defect/breakdown (think Boeing 737 MAX) |
| Legal | New regulations/de-regulation; Employee malpractice; Legal scrutiny |
| Environmental | Infrastructure outage; Natural disaster; Man-made disaster |

The technique can be used to identify more detailed scenarios that are applicable to a given organization. The idea is to think of a range of scenarios, from the probable to the seemingly improbable. Once identified, you can assess if analytical outputs are likely to be valid under each of the scenarios, thus identifying the ‘bounds’ within which the analysis can be applied. For example, you may deem that analytical outputs would continue to be reliable in the event of a democratic change of government, but not in the event of a military coup.
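The assessment described above could be recorded in a simple scenario register, sketched below. The `Scenario` class, its field names, and the helper functions are hypothetical. The two Political entries follow the example in the text, while the Economic and Social judgements are invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Scenario:
    category: str         # one of the six PESTLE headings
    description: str
    analysis_valid: bool  # the analyst's judgement, not a computed value


scenarios = [
    Scenario("Political", "Democratic change of government", True),
    Scenario("Political", "Military coup", False),
    Scenario("Economic", "Interest rate change", True),   # illustrative only
    Scenario("Social", "Epidemic/Pandemic", False),       # illustrative only
]


def review_triggers(items: Iterable[Scenario]) -> List[Scenario]:
    """Scenarios under which the analysis is judged unreliable."""
    return [s for s in items if not s.analysis_valid]


def bounds_by_category(items: Iterable[Scenario]) -> Dict[str, Dict[str, List[str]]]:
    """Group scenario descriptions by category and by validity."""
    bounds: Dict[str, Dict[str, List[str]]] = {}
    for s in items:
        entry = bounds.setdefault(s.category, {"valid": [], "invalid": []})
        entry["valid" if s.analysis_valid else "invalid"].append(s.description)
    return bounds


for s in review_triggers(scenarios):
    print(f"Review analysis if: {s.description} ({s.category})")
```

Keeping the judgement as an explicit field makes the limits of the analysis visible and easy to share with decision-makers, and the list of invalid scenarios doubles as a set of events that should trigger a review.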

Once the limits of analysis are identified, steps can be taken to:

- Identify additional information sources that may be used to strengthen analysis

- Identify types of events that should trigger a review of analytical models, measures, and outputs

- Identify and mitigate any risks posed by the scenario

- Inform decision-makers of the limitations of the analysis so that they can be factored into decision-making

Conclusion

Predictive analytics can provide useful insights to support decision-making. However, the conditions under which data are collected and analyzed naturally limit the situations in which those insights should be applied. Understanding these limits can prevent analytical results from being relied on outside of these bounds.

In the end, it’s better to have some understanding of what you don’t know than to think you know what you don’t.

*The author acknowledges the Whadjuk Noongar people, the traditional custodians of the Derbarl Yerrigan (or the Swan River), and pays her respects to elders past, present, and emerging.

References:

- Taleb, Nassim Nicholas, The Black Swan: The Impact of the Highly Improbable, Random House, 2007.

- Rosling H., Rosling O., Rosling Ronnlund A., Factfulness: Ten reasons we’re wrong about the world – and why things are better than you think, Flatiron Books, 2018.

- Henderson, Leah, The Problem of Induction, Stanford Encyclopedia of Philosophy, March 2018. (Last Accessed January 2022).

- Lovelock, Christina, The Power of Scenario Planning, BA Times, July 2020. (Last Accessed January 2022).

- Operating Framework for Managing the Response to Pandemic Influenza, NHS England, 2013. (Last Accessed January 2022).

- The economic impact of Severe Acute Respiratory Syndrome (SARS), The Australian Government Treasury, 2007. (Last Accessed January 2022).

- Smith, Keogh-Brown, Barnett, Tait, The economy-wide impact of pandemic influenza on the UK: a computable general equilibrium modelling experiment, The BMJ, 2009. (Last Accessed January 2022).