6 Things Every Business Analyst Should Know About the World of Data

You have probably heard of Big Data, Data Science, Business Intelligence, and Data Driven. Maybe not. What do all these buzzwords mean for a Business Analyst?

Is there any difference between a Business Analyst and a Data Scientist?

1. Definition of Big Data, Data Science, Business Intelligence, & Data Driven

Let’s define each of these concepts:

- Big Data – Big data is high volume, high velocity, and/or high variety information assets that require new forms of processing to enable enhanced decision making, insight discovery, and process optimization. (Ref1-Gartner’s definition)

- Data Science – It is a concept to unify statistics, data analysis, and their related methods to understand and analyze actual phenomena. (Ref2- Hayashi, Chiko)

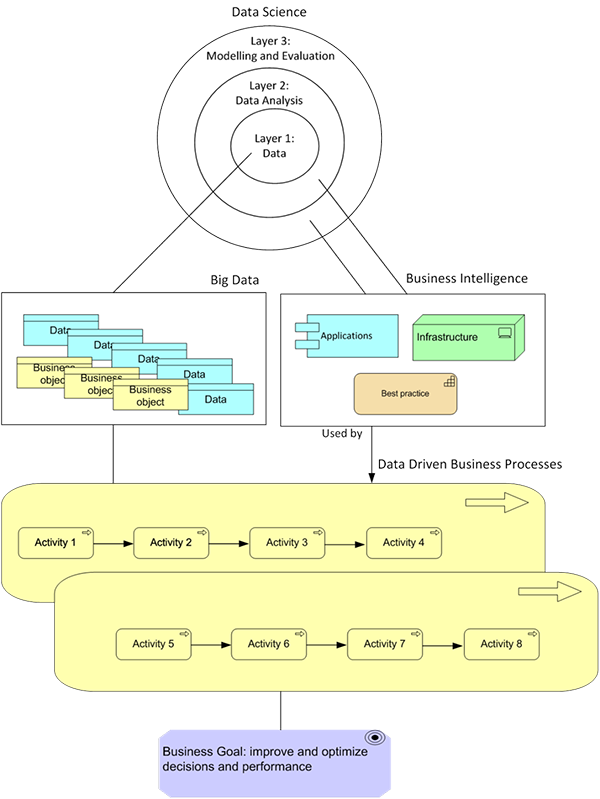

Data Science can include elements from the following three layers:

Layer 1 – Data

Layer 2 – Data Analysis

Layer 3 – Modeling & Evaluation

- Business Intelligence – Business intelligence (BI) is an umbrella term that includes the applications, infrastructure and tools, and best practices that enable access to and analysis of information to improve and optimize decisions and performance. (Ref3- Gartner’s definition)

- Data Driven – It means that progress in an activity is compelled by data, or that decision making is driven by specific data points or results.

2. Concept Relationships

Now that we have defined these concepts, let’s look at how they relate to one another.

- Big Data and Data Science: The data in Layer 1 of Data Science qualifies as Big Data if it has the 3-V characteristics: high volume, high velocity, and/or high variety.

- Business Intelligence and Data Science: Business Intelligence is the implementation of Layers 2 and 3 of Data Science.

- Data-Driven Processes and Data Science: Business Processes create the (Big) Data, and Business Intelligence in turn serves those Business Processes to improve decision making and performance.

The diagram below illustrates the relations between the concepts further:

3. The Business Analyst and Data Science

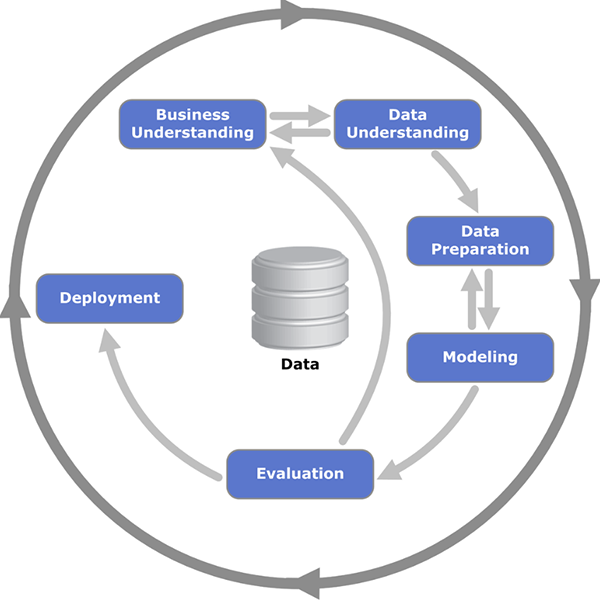

Data Science starts by creating and defining the Business Goal, as presented in the most popular approach, the Cross-Industry Standard Process for Data Mining, or CRISP-DM (Ref4- Shearer C., The CRISP-DM model shown below).

A key part of Strategic Enterprise analysis, as outlined in the BABOK (Chapter 6, Page 99), is the creation of a business goal or vision. Business goals and visions are elaborated and defined clearly based on many factors, including a data-driven approach. Having clear business goals and visions creates a more solid foundation on which programs and projects can be based. Does your project charter contain data-driven results that support the need for the project? A key part of the business case is the data that supports the investment.

The first two steps in CRISP-DM, Business Understanding and Data Understanding, require a good understanding of both the Business Processes and the Data Needs, similar to Phases B (Business Architecture) and C (Information Systems Architectures) of the TOGAF Architecture Development Method (ADM) cycle. Which role is competent enough to take care of these first two steps and bridge them seamlessly? I would argue that the Business Analyst is a good candidate, as BAs have the specific skill sets needed to create specific business goals and visions. In today’s world, however, the BA typically works only at the project level and rarely gets the opportunity to formulate the business vision and goals. Given that opportunity, a Business Analyst can take the vision and goals and use data science to build data sets that support them.

McKinsey’s report “The age of analytics: competing in a data-driven world” states: “Many organizations focus on the need for data scientists, assuming their presence alone will enable an analytics transformation. But another equally vital role is that of the business translator who serves as the link between analytical talent and practical applications to business questions. In addition to being data-savvy, business translators need to have deep organizational knowledge and industry or functional expertise.”

Moreover, the Business Analyst works alongside the Data Scientists, Business Intelligence Specialists, or Database Developers through the whole Data Transformation cycle: Data Collection, Data Preparation/Aggregation, Data Analytics, and Deployment. This partnership is essential to ensure that the Data Analytics deliverables meet the Business needs and deliver the associated Business benefits, which is ultimately the Business goal of being Data-Driven.
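To make the four stages of the Data Transformation cycle concrete, here is a minimal Python sketch. It is illustrative only: the sales records, the `region` and `amount` fields, and the functions `collect`, `prepare`, `analyze`, and `deploy` are hypothetical names chosen for this example, and a real cycle would read from and publish to actual business systems.

```python
# Hypothetical sketch of the Data Collection -> Preparation/Aggregation ->
# Analytics -> Deployment cycle. Records and field names are made up.
from collections import defaultdict


def collect():
    """Data Collection: stands in for extracting records from business systems."""
    return [
        {"region": "North", "amount": "1200"},
        {"region": "South", "amount": "800"},
        {"region": "North", "amount": None},  # incomplete record
        {"region": "South", "amount": "300"},
    ]


def prepare(records):
    """Data Preparation/Aggregation: skip incomplete rows, total amounts per region."""
    totals = defaultdict(float)
    for record in records:
        if record["amount"] is None:
            continue
        totals[record["region"]] += float(record["amount"])
    return dict(totals)


def analyze(totals):
    """Data Analytics: identify the region with the highest total."""
    return max(totals, key=totals.get)


def deploy(totals, top_region):
    """Deployment: hand the result back to the business as a short text report."""
    lines = [f"{region}: {total:.0f}" for region, total in sorted(totals.items())]
    lines.append(f"Top region: {top_region}")
    return "\n".join(lines)


if __name__ == "__main__":
    totals = prepare(collect())
    print(deploy(totals, analyze(totals)))
```

Notice that `prepare` silently drops the incomplete North record. Whether that is acceptable is a business question rather than a technical one, which is exactly where the Business Analyst's involvement alongside the technical team matters.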

Data science can be used at different points:

- Can be used to create the business vision, goals, and objectives – creates a need for further Strategic Enterprise analysis or supports building a business case for a program or project.

- Can be used to support a project charter or business case – shows the projected value of the project in terms of data, the potential investment needed and the potential Return on Investment.

- Can be used throughout the project – creates desired state measurements for the project to achieve by further elaborating on data points outlined in the project charter, creates metrics that can be used to guide the project from initiation to implementation, and reports objectively to the project team on how well the project solution design will meet the desired state and business goals.
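The Return on Investment and desired state measurements in the list above can be expressed as simple formulas. The sketch below assumes one common definition of ROI, net benefit divided by investment, and measures progress as the share of the gap between a baseline and a target that has been closed so far. The function names `roi` and `progress_to_target` and all figures are hypothetical.

```python
def roi(expected_benefit: float, investment: float) -> float:
    """Return on Investment as a fraction: (benefit - investment) / investment."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (expected_benefit - investment) / investment


def progress_to_target(baseline: float, current: float, target: float) -> float:
    """Share of the baseline-to-target gap closed so far (1.0 means the target is met).

    Works for metrics that should rise as well as for metrics that should fall.
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / (target - baseline)


if __name__ == "__main__":
    # Hypothetical business case: 100,000 invested for an expected 150,000 benefit.
    print(f"ROI: {roi(150_000, 100_000):.0%}")  # ROI: 50%
    # Hypothetical desired state: cut average handling time from 10 to 6 minutes;
    # the project has reached 8 minutes so far.
    print(f"Progress: {progress_to_target(10, 8, 6):.0%}")  # Progress: 50%
```

Because the same progress measure works for metrics that should fall (handling time) and rise (sales), one function can report on every data point in the project charter.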

4. The Data Driven World and TOGAF

TOGAF expresses the concept of Continuous Improvement through a top-down approach, whereas Data-Driven expresses it through a bottom-up approach. The two do not conflict: data comes from Business Processes, and the inputs and outputs of Business Processes are ultimately represented as data. TOGAF and Data-Driven approaches therefore meet in the middle.

Such a meeting in the middle can cause a train wreck unless both sides are focused on the same business vision and goals. It’s important to consider the data and its meaning: two groups can get the same data but draw very different conclusions. Clearly defining the data points and terms helps ensure that TOGAF and the data-driven world work well together.
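As a small illustration of two groups drawing different conclusions from the same data, the hypothetical sketch below counts "active customers" under two definitions. The customer data, the `active_customers` function, and the 30-day and 365-day windows attributed to a Marketing team and a Finance team are all made up for this example.

```python
from datetime import date

# Hypothetical data: each customer's most recent purchase date.
LAST_PURCHASE = {
    "A": date(2024, 1, 10),
    "B": date(2023, 11, 2),
    "C": date(2023, 6, 30),
}


def active_customers(last_purchase, as_of, window_days):
    """Customers whose last purchase falls within `window_days` of `as_of`."""
    return sorted(
        customer
        for customer, purchased in last_purchase.items()
        if (as_of - purchased).days <= window_days
    )


if __name__ == "__main__":
    as_of = date(2024, 1, 31)
    # Two hypothetical teams, same data, different definitions of "active".
    print("Marketing (30 days):", active_customers(LAST_PURCHASE, as_of, 30))  # ['A']
    print("Finance (365 days):", active_customers(LAST_PURCHASE, as_of, 365))  # ['A', 'B', 'C']
```

Neither answer is wrong; they simply answer different questions. Writing down the agreed definition, including the window and the reference date, is what keeps both sides working from the same meaning.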

In this sense, Enterprise Architecture is Data Driven. It is difficult, if not impossible, to build the architecture for an organization without at least understanding its data. Data Science comes into the picture to fill this gap: it relates an understanding of business data directly to the business vision and goals. Enterprise Architecture needs to understand the business vision and goals clearly to create the environments needed to support the business more effectively.

5. A Fool with a Tool is Still a Fool

Data-Driven does not mean you need a fancy Business Intelligence tool or a vast data warehousing infrastructure. It means you need easy access to high-quality data to perform queries, extracts, and analysis. Complex tools may or may not be the answer. Choose your tools carefully to make sure they meet your needs.
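To illustrate that plain queries can go a long way before a dedicated BI tool is needed, here is a sketch using Python's built-in `sqlite3` module. The `orders` table, its columns, and the helper names `make_sample_db` and `totals_by_region` are hypothetical; in practice the query would run against wherever the organization's data actually lives.

```python
import sqlite3


def make_sample_db():
    """Build an in-memory database with a small, hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders (region, amount) VALUES (?, ?)",
        [("North", 1200.0), ("South", 800.0), ("South", 300.0)],
    )
    conn.commit()
    return conn


def totals_by_region(conn):
    """A plain SQL query: total order amount per region, sorted by region."""
    return conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    ).fetchall()


if __name__ == "__main__":
    conn = make_sample_db()
    print(totals_by_region(conn))  # [('North', 1200.0), ('South', 1100.0)]
    conn.close()
```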

6. Challenge the Data

Data-Driven means data is challenged. Is this data valid? Is the data of high quality? Should this data be used for decision making? To make a good business decision based on data, you must challenge its meaning and quality routinely. Don’t take a data set’s results at face value. Perform data analysis and validate them. When making assumptions, it is important to define those assumptions and communicate them clearly along with the data.
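The questions above can be turned into routine, repeatable checks. The following Python sketch assumes records are dictionaries with hypothetical `id` and `amount` fields and checks three common data-quality dimensions: completeness, uniqueness, and validity. The `challenge` function and its default minimum amount of 0.0 are assumptions for illustration; thresholds like this are themselves assumptions that should be documented and communicated along with the data.

```python
def challenge(records, required=("id", "amount"), min_amount=0.0):
    """Return human-readable issues found in `records`; an empty list means none found."""
    issues = []
    seen_ids = set()
    for i, record in enumerate(records):
        # Completeness: every required field must be present and not None.
        for field in required:
            if record.get(field) is None:
                issues.append(f"row {i}: missing {field}")
        # Uniqueness: the same id should not appear twice.
        record_id = record.get("id")
        if record_id is not None:
            if record_id in seen_ids:
                issues.append(f"row {i}: duplicate id {record_id}")
            seen_ids.add(record_id)
        # Validity: amounts below the agreed minimum (an assumption) are suspect.
        amount = record.get("amount")
        if amount is not None and amount < min_amount:
            issues.append(f"row {i}: amount {amount} below {min_amount}")
    return issues


if __name__ == "__main__":
    # Hypothetical records containing one problem of each kind.
    sample = [
        {"id": 1, "amount": 100.0},
        {"id": 2, "amount": None},
        {"id": 1, "amount": 50.0},
        {"id": 3, "amount": -20.0},
    ]
    for issue in challenge(sample):
        print(issue)
```

An empty result does not prove the data is right, only that it passed the checks you thought to write, so the checks themselves deserve the same scrutiny as the data.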