Why Cyber And Physical Security Needs To Be Top Of Mind For Business Analysts

Cybercrime costs organizations $2.9 million every minute, and data breaches cost major businesses $25 per minute.

It is no secret that cybersecurity is a top priority for all businesses, especially those adopting emerging cloud-based and IoT technologies.

Cyber and physical security are becoming increasingly linked, and business analysts must be prepared to include both physical and digital security in any project they oversee. Keep reading to understand how you can implement a converged physical and digital security strategy in your business.

Why Business Analysts Should Have Cybersecurity Knowledge

Cybersecurity is part of risk management and should be included in every project your company oversees.

Because cloud-based technologies are increasingly built into physical security strategies and are necessary to support hybrid working, business analysts need a stronger understanding of cybersecurity.

The business analyst is the liaison between the project manager and the technical lead, and must be able to straddle both project management and the technical side of the operation.

With a thorough knowledge of cyber and physical security, the business analyst can improve communication and create swifter operating procedures. Business analysis isn’t just common sense; it requires knowledge of key aspects of a business’s infrastructure.

Merging cyber and physical security is necessary to meet modern security demands, as the internet of things (IoT) and cloud-based technologies are making businesses more exposed to hacking. The data that analysts need to evaluate is hosted on cloud-based platforms, which require protection to prevent a breach.

How To Implement Better Cybersecurity Practices

Here are some of the best tips for business analysts to modernize their security strategy to handle physical and digital security threats. Better cybersecurity means more trust from stakeholders and protection from potential losses caused by the exposure of sensitive data.

Merging Physical And Digital Security

With the increased adoption of IoT and cloud-based technologies, your business’s security staff may need restructuring. Housing digital and physical security teams separately can make modern security threats harder to handle effectively.

With assets becoming both physical and digital, your physical security staff and IT team may have difficulty determining which security elements fall under their jurisdiction. By merging both teams, you can improve their communication and create a converged security strategy that allows for a faster response to both physical and digital threats.

You can integrate cybersecurity software with physical security technologies to prevent unauthorized users from accessing critical security data. Doing so modernizes your cloud-based security system and makes it more resilient to physical and digital security breaches.
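One way to picture this kind of integration is a check that compares door-entry records with on-site logins. The sketch below is illustrative only: the `badge_ins` log, the `is_suspicious_onsite_login` function, and the 12-hour window are all assumptions, not features of any particular access control or security product.

```python
from datetime import datetime, timedelta

# Hypothetical badge-in log: user name -> time of most recent door entry.
badge_ins = {
    "alice": datetime(2024, 1, 8, 8, 55),
}


def is_suspicious_onsite_login(user, login_time, badge_log, window=timedelta(hours=12)):
    """Return True if an on-site login has no badge-in within `window` before it."""
    last_entry = badge_log.get(user)
    if last_entry is None:
        # No record of this user entering the building at all.
        return True
    elapsed = login_time - last_entry
    # The badge-in must come before the login, and not too long before it.
    return not (timedelta(0) <= elapsed <= window)


print(is_suspicious_onsite_login("alice", datetime(2024, 1, 8, 9, 30), badge_ins))
print(is_suspicious_onsite_login("bob", datetime(2024, 1, 8, 9, 30), badge_ins))
```

A flagged login is not proof of a breach; it is a prompt for the merged security team to investigate.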

Using Physical Access Control To Protect Digital Assets

Your office building is home to many digital assets that store sensitive data. If an unauthorized user gains access to your physical servers, your data can be vulnerable. Installing access control technology, such as door locks that admit only authorized users, helps keep intruders out of your building.

Mobile credentials can be used for access control, which has many benefits. Users can download an app and receive their mobile access key rather than waiting to be issued a physical key or keycard. Bluetooth access readers can detect mobile devices stored in pockets and bags, so your employees can enter the building without taking out their device and presenting it to the reader. Smart door locks can enhance your security and make access more convenient for your employees.

Using A Zero-Trust Security Strategy

A zero-trust security strategy does not assume the trustworthiness of employees and building visitors. Zero trust can be applied to both physical and digital security strategies to reduce the likelihood of an internal security breach.

Access control door locks can be installed internally in your building to ensure that permissions to areas containing sensitive data and company assets are granted only to users who require access. The same principle can be applied to your cybersecurity strategy by giving users permission to access only the data they need to perform daily operations.
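The principle described above, granting only the access each user needs, can be sketched as a deny-by-default check that treats doors and data the same way. This is a minimal illustration: the `User` class, the `is_allowed` function, and the resource names are hypothetical and do not represent any specific product.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class User:
    name: str
    # Resources this user has been explicitly granted; everything else is denied.
    grants: frozenset = field(default_factory=frozenset)


def is_allowed(user: User, resource: str) -> bool:
    """Deny by default: access requires an explicit grant for this resource."""
    return resource in user.grants


# Hypothetical users and resource names, for illustration only.
analyst = User("analyst", frozenset({"door:office", "data:sales_reports"}))
visitor = User("visitor", frozenset({"door:lobby"}))

print(is_allowed(analyst, "data:sales_reports"))  # True
print(is_allowed(analyst, "door:server_room"))    # False
print(is_allowed(visitor, "data:sales_reports"))  # False
```

Because nothing is permitted unless it is listed, a new door or dataset stays closed to everyone until someone deliberately grants access.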

A zero-trust security strategy is essential to reduce the risk of an internal security breach that could cost your business money and your stakeholders’ trust.

Cybersecurity Training

Data is more vulnerable in a hybrid or remote work model, which means your employees should receive training on keeping their devices and networks protected. You can start by providing basic cybersecurity training covering the following topics:

- How to avoid phishing scams.

- How to set strong passwords.

- How to keep device software up to date to avoid vulnerabilities.

By providing your employees with basic cybersecurity training, you can significantly reduce the likelihood of a cybersecurity breach caused by human error.
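To make the password topic concrete in a training session, a simple checker like the one below can show why some passwords are weak. It is a sketch under stated assumptions: the 12-character minimum, the tiny `COMMON_PASSWORDS` deny-list, and the `password_problems` function are illustrative choices, not an official policy.

```python
# Hypothetical deny-list; a real one would be far larger.
COMMON_PASSWORDS = {"password", "123456", "qwerty", "letmein"}


def password_problems(password: str, min_length: int = 12) -> list[str]:
    """Return a list of reasons the password is weak (empty if none found)."""
    problems = []
    if len(password) < min_length:
        problems.append(f"shorter than {min_length} characters")
    if password.lower() in COMMON_PASSWORDS:
        problems.append("appears on the common-password list")
    return problems


print(password_problems("qwerty"))
print(password_problems("correct horse battery staple"))
```

An empty list only means that these two checks passed; it does not guarantee the password is strong.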

Summary

Business analysts face new challenges in ensuring that the projects they oversee are sufficiently protected, both physically and digitally. A converged security strategy combines physical and digital security strengths and helps future-proof your business against the changing nature of security threats.

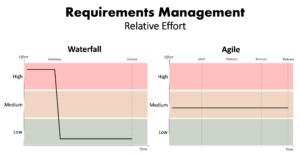

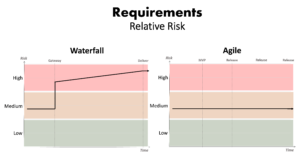

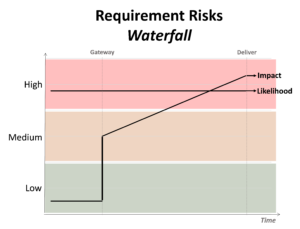

Figure 2 – Waterfall vs. Agile: Relative risk posed by ill-defined requirements over time

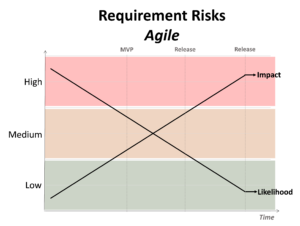

Figure 4 – Agile: Likelihood and impact of ill-defined requirements over time

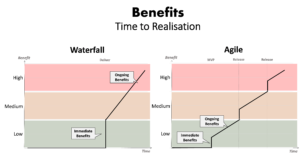

Figure 5 – Waterfall vs. Agile: Relative time to the realization of benefits