Tackling Updates to Legacy Applications

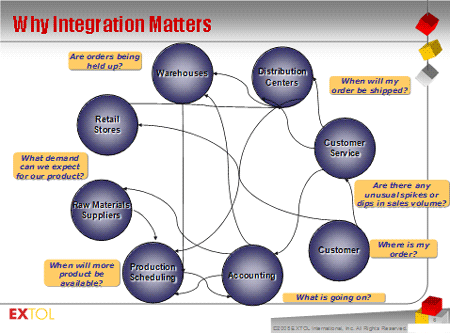

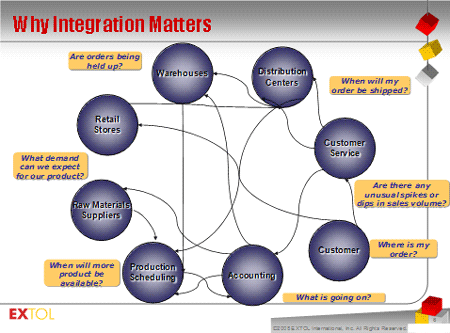

After 40 years of serious business software development, the issues involved in dealing with legacy systems are almost commonplace. Legacy applications present an awkward problem that most companies approach with care, both because the systems themselves can be something of a black box, and because any updates can have unintended consequences. Often laced with obsolete code that can be decades old, legacy applications nonetheless form the backbone of many newer applications that are critical to a business’s success. As a result, many companies opt to continue with incremental updates to legacy applications, rather than discarding the application and starting anew.

And yet, for businesses to remain efficient and responsive to customers and developing markets, updates to these systems must be timely, predictable, reliable, low-risk and done at a reasonable cost.

Recent studies on the topic show that the most common initiatives when dealing with legacy systems today are to add new functionality, redesign the user interface, or replace the system outright. Since delving into the unknown is always risky, most IT professionals attempt to do this work in as non-invasive a manner as possible through a process called “wrapping” the application. Wrapping keeps the unknown as contained as possible: the rest of the system interacts with the legacy application only through a minimal, well-defined, and (hopefully) well-tested layer of software.
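To make the idea of wrapping concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `legacy_calc` stands in for an opaque legacy routine with an awkward fixed-width return format, and `LegacyAccountWrapper` and `DepositResult` are illustrative names, not part of any real product. The point is only that new code talks to the thin wrapper and never to the legacy routine directly.

```python
from dataclasses import dataclass


def legacy_calc(acct: str, amt_cents: int, mode: int) -> str:
    """Hypothetical stand-in for an opaque legacy routine.

    Returns a fixed-width record: a 2-character status code followed by
    a 10-digit balance in cents. Mode 1 is assumed to mean "deposit".
    """
    if mode != 1 or amt_cents < 0:
        return "99" + "0" * 10
    # Pretend every account starts with a balance of 500 cents.
    return "00" + f"{amt_cents + 500:010d}"


@dataclass(frozen=True)
class DepositResult:
    ok: bool
    balance_cents: int


class LegacyAccountWrapper:
    """Minimal, well-defined layer: the only code that calls legacy_calc."""

    _DEPOSIT_MODE = 1  # magic number hidden from callers

    def deposit(self, account_id: str, amount_cents: int) -> DepositResult:
        # Validate at the boundary so bad input never reaches the legacy code.
        if amount_cents < 0:
            raise ValueError("amount_cents must be non-negative")
        raw = legacy_calc(account_id, amount_cents, self._DEPOSIT_MODE)
        # Translate the fixed-width record into a typed result.
        code, balance = raw[:2], raw[2:]
        return DepositResult(ok=(code == "00"), balance_cents=int(balance))
```

A caller would write `LegacyAccountWrapper().deposit("ACCT-1", 1500)` and receive a typed result. Because the wrapper is small, it can be tested thoroughly even when the code behind it cannot.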

In all cases, the more a company understands about the application – or at least the portions that are going to be operated on – the less risky the operation becomes. This means not only unraveling how the application was first implemented (design), but also what it was supposed to do (features). This is essential if support for these features is to continue, or if they are to be extended or adjusted.

Updating Legacy Applications: A Formidable Task

What characterizes legacy applications is that the information relating to implementation and features isn’t complete, accurate, current, or in one place. Often it is missing altogether. Worse still, the documentation that does exist is often riddled with information from previous versions of the application that is no longer relevant and therefore misleading.

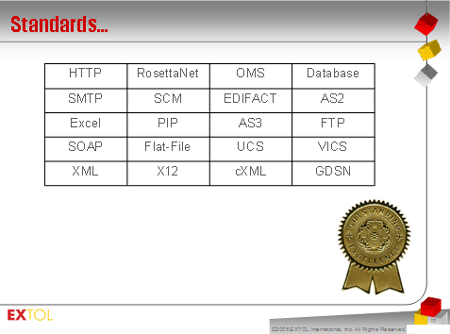

Other problems can plague legacy development: the original designers often aren’t around; many of the changes made over the years haven’t been adequately documented; and the application is based on older technologies (languages, middleware, interfaces, etc.) for which the necessary skill sets are no longer available.

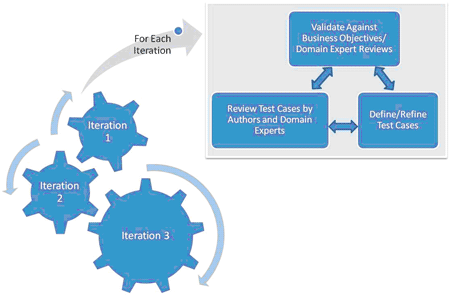

Nonetheless, it is possible to minimize the risk of revising legacy applications by applying a methodical approach. Here are some steps to successful legacy updating:

Gather accurate information. The skills of a forensic detective are required to gain an understanding of a legacy application’s implementation and its purpose. This understanding is essential to reducing risk and to making development feasible. Understanding is achieved by identifying the possible sources of information, prioritizing them, filtering the relevant from the irrelevant, and piecing together a jigsaw puzzle that lays out the evolution of the application as it has grown and changed over time. This understanding then provides the basis for moving forward with the needed development.

In addition to the application and its source code, there are usually many other sources for background information, including user documentation and training materials, the users, regression test sets, execution traces, models or prototypes created for past development, old requirements specifications, contracts, and personal notes.

Different sources are better suited to providing different types of information. For example, observing users of the system is good for identifying the core functions but poor at finding infrequently used functions and the back-end data processing being performed. Conversely, studying the source code is a good way to understand the data processing and algorithms being used. Together, these two techniques can help piece together the system’s features and what they are intended to accomplish. The downside is that these techniques are poor at identifying non-user-oriented functions.

The majority of tools whose purpose is to help with legacy application development have tended to focus on one source. Source code analyzers parse and analyze the source code and data stores in order to produce metrics and graphically depict the application’s structure from different views. Another group of tools focuses on monitoring transactions at interfaces in order to deduce the application’s behavior.
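As a rough illustration of what a source code analyzer does, the sketch below uses Python’s standard `ast` module to parse a small piece of code and report, for each function, its length, its number of branching statements, and the functions it calls. The `SAMPLE` program and the `summarize` helper are invented for this example. Real legacy code is often written in older languages and needs a parser for that language, so treat this as a picture of the idea, not of any particular tool.

```python
import ast

# Hypothetical code under analysis; stands in for a legacy module.
SAMPLE = '''
def load(path):
    return open(path).read()

def parse(text):
    return text.split(",")

def report(path):
    rows = parse(load(path))
    if rows:
        print(len(rows))
'''


def summarize(source: str) -> dict:
    """Return per-function line count, branch count, and directly named calls."""
    tree = ast.parse(source)
    summary = {}
    for node in ast.walk(tree):
        if not isinstance(node, ast.FunctionDef):
            continue
        # Only calls to plain names are counted; method calls such as
        # text.split(...) are ignored in this simplified sketch.
        calls = sorted({
            n.func.id
            for n in ast.walk(node)
            if isinstance(n, ast.Call) and isinstance(n.func, ast.Name)
        })
        branches = sum(
            isinstance(n, (ast.If, ast.For, ast.While)) for n in ast.walk(node)
        )
        summary[node.name] = {
            "lines": node.end_lineno - node.lineno + 1,
            "branches": branches,
            "calls": calls,
        }
    return summary
```

Calling `summarize(SAMPLE)` shows that `report` depends on both `load` and `parse`. That is the kind of structural fact such a tool surfaces, though it says nothing about why the report exists.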

Adopt the appropriate mindset. While the information these tools produce is useful, it usually provides only a small portion of what is needed to significantly reduce the risk associated with legacy application development. A key pitfall of many development projects is not recognizing that there are two main “domains” in software development efforts: the “Problem Domain” and the “Solution Domain.”

Business clients and end users tend to think and talk in the Problem Domain, where the focus is on features, while IT professionals tend to think and talk in the Solution Domain, where the focus is on the products of development. Source code analysis and transaction monitoring tools focus only on the Solution Domain. In other words, they’re focused more on understanding how the legacy system was built than on what it is intended to accomplish and why.

More recent and innovative solutions can handle the wide variety of sources required to develop a useful understanding and can extend this understanding from the Solution Domain up into the Problem Domain. This helps users understand a product’s features and allows them to map these features to business needs. It is like reconstructing an aircraft from its pieces following a crash in order to understand what happened.

Pull the puzzle together. The most advanced tools allow companies to create a model of the legacy application from the various pieces of information that the user has been able to gather. The model, or even portions of it, can be simulated to let the user and others analyze and validate that the legacy application has been represented correctly. This model then provides a basis for moving forward with enhancements or replacement.

The models created by these modern tools are a representation of (usually a portion of) the legacy application. In essence, the knowledge that was “trapped” in the legacy application has been extracted and represented in a model that can be manipulated to demonstrate the proposed changes to the application. The models also make it possible to validate that any new development to the legacy application will support the new business need before an organization commits money and time to development.
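A toy example may help show what simulating a model and trying out a proposed change can look like. The sketch below assumes a hypothetical order workflow recovered from a legacy system and represents it as a simple table of states and events. The names `LEGACY_MODEL`, `simulate`, and `with_change` are invented for illustration, and commercial modeling tools are far richer than this.

```python
# Hypothetical recovered workflow: state -> {event: next state}.
LEGACY_MODEL = {
    "new": {"approve": "approved", "reject": "closed"},
    "approved": {"ship": "shipped"},
    "shipped": {"confirm": "closed"},
    "closed": {},
}


def simulate(model: dict, start: str, events: list) -> list:
    """Walk the model through a sequence of events and return the states visited."""
    state = start
    path = [state]
    for event in events:
        transitions = model[state]
        if event not in transitions:
            raise ValueError(f"event {event!r} not allowed in state {state!r}")
        state = transitions[event]
        path.append(state)
    return path


def with_change(model: dict, state: str, event: str, target: str) -> dict:
    """Return a copy of the model with one proposed transition added."""
    changed = {s: dict(t) for s, t in model.items()}
    changed.setdefault(state, {})[event] = target
    changed.setdefault(target, {})
    return changed
```

Running `simulate(LEGACY_MODEL, "new", ["approve", "cancel"])` raises an error, because the recovered model has no cancel step. Running the same events against `with_change(LEGACY_MODEL, "approved", "cancel", "closed")` succeeds. This is the sort of check that can be made before any change is committed to the real application.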

Once the decision is made to proceed, many tools can generate the artifacts needed to build and test the application. Tools exist today that can generate complete workflow diagrams, simulations/prototypes, requirements, activity diagrams, documentation, and a complete set of well-formed tests automatically from the information gathered above.

Legacy Applications: Will They Become a Thing of the Past?

Current trends toward new software delivery models also show promise in alleviating many of the current problems with legacy applications. Traditional software delivery models require customers to purchase perpetual licenses and host the software in-house. Upgrades are significant events, with expensive logistics required to “certify” new releases, upgrade all user installations, convert datasets to the new version, and train users on all the new and changed features. As a result, upgrades do not happen very often, maybe once a year at most.

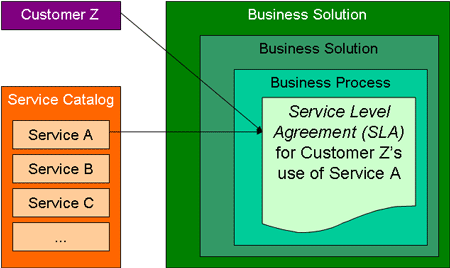

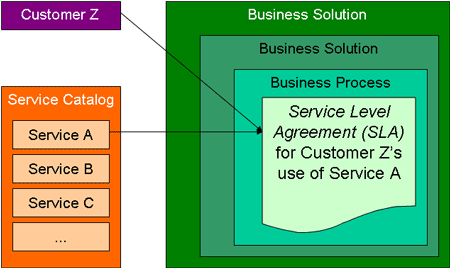

Software delivery models are evolving, however. Popular in some markets, such as customer relationship management (CRM), Software as a Service (SaaS) allows users to subscribe to a service that is delivered online. The customer does not deal with issues of certification, installation, and data conversion. In this model, the provider upgrades the application on an almost continual basis, often without the users even realizing it. The application seemingly just evolves in sync with the business and, hopefully, the issue of legacy applications will become a curious chapter in the history of computing.

Tony Higgins is Vice-President of products at Blueprint. He can be reached at [email protected].