In today’s economic environment, business organizations are demanding focused attention to fiscal discipline. IT organizations are being asked to support in-production applications on flat budgets, and new development is largely being approved only when it promises greater efficiency. Software applications are the focal point of these efficiency efforts, as consolidation and integration projects can both reduce the cost of supporting multiple siloed applications and streamline business processes for end users.

In this effort to do more with less, IT software groups turned to outsourcing in record numbers in 2009. According to IT World, the economic collapse has accelerated the use of outsourcers for software projects to record levels [1].

With CIOs turning to outsourcing as a strategic imperative for making software projects more efficient, new challenges are emerging that threaten the very efficiencies CIOs are trying to achieve.

By definition, outsourcing introduces third-party goods and services to augment capacity and capabilities. Because IT software has mission-critical implications, such third-party influence places a new burden on the business to ensure that outsourced teams are properly goal-oriented, instructed, and managed so that they remain productive.

While many areas can be influenced to ensure outsourcer success, study after study indicates that the true control point for IT software projects is application requirements definition.

What follows explores industry, analyst, and customer recommendations on how to focus on requirements to ensure application development accuracy and to control risk, so that the IT organization can turn those efficiencies into increased horsepower and lower operational costs.

Requirements Communication: A Challenge for IT Project Teams

The quality of requirements communication is a significant challenge for IT project teams, whether they are co-located or distributed. In the Software Development Lifecycle, the time dedicated to requirements definition has largely been concentrated at the early stage of the lifecycle, and it has involved dozens of subject matter experts who typically carry the title of business analyst or business systems analyst.

However, recent studies indicate that while business analysts consume up to 10% of the project budget documenting requirements specifications [2], the result of their effort is typically a set of difficult-to-understand paper-based documents. These documents are consumed largely by IT project teams, who must work to understand the author’s intent and translate the business need into detailed specification documentation.

Even in IT projects staffed largely by in-house development teams (i.e., not outsourced), the resulting rework and waste has been measurable. IAG reports that waste and rework traceable directly to poor requirements can consume upwards of 40% of the project budget [3].

As IT organizations embrace outsourced teams as an extension of their software project teams, the challenge of communicating requirements is exacerbated. MetaGroup reports that over 50% of organizations that use outsourced teams hold critical business-application knowledge in the minds of in-house developers who have been disenfranchised by the outsourced labor pool [4]. As a result of this loss of subject matter expertise, outsourced teams become more dependent on the customer to produce highly precise and specific requirements documentation. MetaGroup also reports that turnover at outsourced service providers averages 15-20%, making it likely that some of the people assigned to your project will change during the project cycle. This further reinforces the need for requirements direction that is easy to reference and consume.

Experienced outsourcing customers and industry analysts have identified the focus areas that determine an IT project team’s success with outsourcing. While many areas can affect the success of an IT team that relies on outsourced teams, a select few improve success dramatically. IDC’s recent report on the control points for outsourcing success helps draw attention to the most important of these.

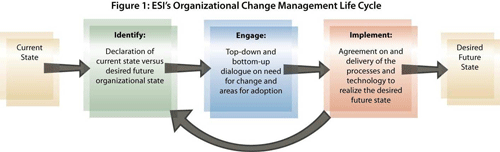

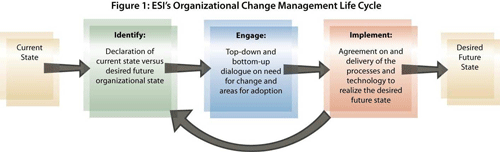

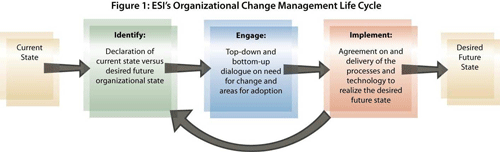

IDC goes on to explain that the control points which determine whether outsourcing is an opportunity for efficiency, rather than a threat to it, are the strategic touch-points with the outsourced team. IDC identifies these touch-points as requirements definition, quality assurance, and in-flight project collaboration [5]. Other analysts, such as Gartner, voke, and Forrester, have offered supporting research that points to the same control points.

Controlling the Control Points

As mentioned earlier, there is a generally accepted principle that requirements, quality assurance, and collaboration are important when aligning outsourced teams. However, when IT arranges the control points in relation to one another, the logical priority of requirements becomes clear. As you can see in Figure A, the quality assurance and project collaboration control points are directly affected by the depth, quality, and understanding of the project’s Requirements Definition phase. In fact, very few quality assurance test scenarios are not directly based on (and traced back to) functional or non-functional requirements. A modern quality assurance trend is a move toward test-based development, a trend that is accelerating with outsourced teams. Test-based development builds a one-for-one relationship between test cases and requirements, in which a test case can literally function as a requirement asset. In addition, collaboration with development is often directly linked to the implementation of a business requirement, or to how the software influences that requirement.

Figure A: Relationship of Requirements to QA and Collaboration

By focusing on improving requirements definition, IT project teams that use outsourcing groups can better manage all of the control points, and thus improve the impact and focus of quality assurance and in-flight collaboration efforts.
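To make the requirement-to-test traceability described above more concrete, here is a minimal sketch in Python. It assumes requirements and test cases are tracked as simple records with hypothetical IDs (such as `REQ-1` and `TC-1`); the `TraceableTest` type and `trace_report` function are illustrative names, not part of any particular tool. The check reports requirements that no test verifies, and tests that trace to nothing or to a requirement that does not exist.

```python
from dataclasses import dataclass, field


@dataclass
class TraceableTest:
    test_id: str
    # IDs of the requirements this test verifies (hypothetical ID scheme)
    traces_to: list[str] = field(default_factory=list)


def trace_report(requirements: dict[str, str],
                 tests: list[TraceableTest]) -> dict[str, list[str]]:
    """Return requirements with no covering test, plus tests that trace to
    no requirement or to an unknown requirement ID."""
    covered = {rid for t in tests for rid in t.traces_to}
    untested = sorted(rid for rid in requirements if rid not in covered)
    orphans = sorted(
        t.test_id
        for t in tests
        if not t.traces_to or any(r not in requirements for r in t.traces_to)
    )
    return {"untested_requirements": untested, "orphan_tests": orphans}


# Hypothetical example data
requirements = {
    "REQ-1": "Customer can view portfolio balance",
    "REQ-2": "Customer can place a trade",
    "REQ-3": "Trades over a limit require approval",
}
tests = [
    TraceableTest("TC-1", ["REQ-1"]),
    TraceableTest("TC-2", ["REQ-2"]),
    TraceableTest("TC-3", ["REQ-9"]),  # traces to a requirement that does not exist
]
report = trace_report(requirements, tests)
print(report)
# {'untested_requirements': ['REQ-3'], 'orphan_tests': ['TC-3']}
```

In an outsourced engagement, a report like this gives both sides a shared, mechanical view of where the requirements and the test suite have drifted apart.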

Problems with Requirements Communication

As we discussed in the previous section, working with outsourcers brings the obvious challenge of aligning distributed, third-party resources around project goals. Location alone can introduce time zone and collaboration barriers that tax productivity and efficiency. With third-party organizations, however, IT groups also face additional challenges involving processes, tools, training, context, domain expertise, and incentives.

Requirements communication sits squarely at the center of this challenge. As we discussed, traditional methods of communicating requirements, which include enumerated lists of features, functional and non-functional requirements, business process diagrams, data rules, etc., are generally documented in large word-processing or spreadsheet documents. When applied to an outsourced team, this method of communication creates significant waste and opportunities for failure, because the barrier to understanding is often too large to overcome.

Incorrect interpretation and the lack of requirements validation can create artificial (or false) goals that consume valuable outsourcing resources. Due to the nature of software development, these false goals usually manifest themselves as incorrectly implemented code, resulting in costly waste and rework. Outsource providers often treat such rework as “changes” and bill these “changes” back to the customer. This further erodes the efficiency that IT organizations strive to achieve when adopting outsourcing in the first place.

Models, Validation and the Requirements Contract

To significantly reduce the probability of ineffective requirements communication through natural language documentation, IT organizations are transitioning to more precise vehicles to communicate requirements.

One of these vehicles is the adoption of a model-based approach that communicates requirements in a highly visual way. Requirements models capture detailed context through highly precise data structures. Complete models use universally accepted formats as structural guides, interlinking them to create a holistic representation of the future system. The formats used in these holistic representations include use cases for role-based (or actor-based) flows, user-interface screen mockups, data lists, and the linkage of decision points to business process definitions. These structures augment enumerated lists of functional and non-functional requirements.

The benefits of models include the use of simulation to ensure requirements understanding. Simulation is a communication mechanism that walks requirements stakeholders through process, data, and UI flows in linear order to represent how the system should function. Stakeholders can witness the functionality in rich detail, consuming the information in a structured way that minimizes miscommunication.

Models and simulation also provide context for validation. Validation is the process in which stakeholders review each and every requirement in the appropriate sequence, make comments, and then sign off to confirm that the requirements are accurate, clear, understood, and feasible to implement. Requirements validation can be considered one of the most cost-effective quality control cycles that can be implemented for an outsourcing initiative.
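The validation cycle described above can be pictured as a simple gate. The following is a minimal sketch, assuming each requirement must be signed off by every required stakeholder role and must have no unresolved review comments before it is handed to the outsourced team; the roles (`business`, `development`, `qa`) and the data layout are hypothetical and not taken from any specific requirements tool.

```python
from dataclasses import dataclass, field

# Hypothetical stakeholder roles whose sign-off is required
REQUIRED_ROLES = {"business", "development", "qa"}


@dataclass
class Requirement:
    req_id: str
    text: str
    sign_offs: set[str] = field(default_factory=set)        # roles that have approved
    open_comments: list[str] = field(default_factory=list)  # unresolved review comments


def is_validated(req: Requirement, required_roles: set[str] = REQUIRED_ROLES) -> bool:
    """A requirement passes validation once every required role has signed off
    and no review comments remain open."""
    return required_roles <= req.sign_offs and not req.open_comments


def pending(reqs: list[Requirement]) -> list[str]:
    """IDs of requirements that are not yet ready to hand to the outsourced team."""
    return [r.req_id for r in reqs if not is_validated(r)]


reqs = [
    Requirement("REQ-1", "View portfolio balance", {"business", "development", "qa"}),
    Requirement("REQ-2", "Place a trade", {"business", "qa"}),  # missing development sign-off
    Requirement("REQ-3", "Approve large trades", {"business", "development", "qa"},
                ["Which limit applies to joint accounts?"]),  # comment still open
]
print(pending(reqs))  # ['REQ-2', 'REQ-3']
```

Treating sign-off and open comments as separate conditions keeps a fully approved requirement from slipping through while a stakeholder question is still unanswered.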

Since requirements are the “blueprint” of the system, outsourced stakeholders can use requirements models and simulation during implementation to understand the goals of the project. Simulation reduces ambiguity by providing a visual representation of the goals, which leaves far less room for interpretation.

Rich requirements documentation is a specified deliverable for most IT projects, for reasons that include regulatory compliance (Sarbanes-Oxley, HIPAA, etc.), internal procedural specifications, and other internal review cycles.

This documentation also serves as the contract between the customer and the outsourced provider. Models can serve as the basis of this documentation, and next-generation requirements workbench solutions (such as Blueprint Requirements Center) can transform models into rich, custom Microsoft Word documentation. Because these documents are auto-generated, the effort required to build and maintain them is minimal.

Abstract vs. Detailed: Outsourcer Involvement in Requirements Definition

Outsourcing providers have learned a tremendous amount about how to improve the efficiencies of requirements communication. Many providers are shifting to a much heavier involvement in the process of Requirements Definition. Others continue to operate in a more traditional model, which abstracts them from the requirements definition process, leaving this on the shoulders of the customer.

Western outsourcers have pioneered and widely practiced an approach that includes working with customers to articulate, document, and communicate requirements. Part of the value proposition of this approach is that the outsourced provider mitigates the risk of misunderstanding and ensures that members of the outsourced team gain a clearer understanding of the project goals and deliverables. This is often referred to as a detailed approach to requirements definition in outsourced projects.

Indian and European outsourcers largely continue a practice that abstracts the outsourced provider from the definition of requirements. Such abstraction means that the customer takes on the responsibility of clearly documenting and articulating requirements to outsourced providers. This requires the customer to specify project requirements with extreme accuracy and precision, knowing that cultural, time zone, process, and alignment barriers will affect how those requirements are interpreted. This is often referred to as an abstract approach to requirements definition in outsourced projects.

It is important for an IT organization to understand which of these two approaches is being taken, as the choice can dramatically change the methodologies and practices required to ensure clear understanding of project requirements.

The Solution: A Case Study

The principles described in this paper should be considered and applied at the earliest stages of the project, both to set the stage for the work that follows and because the earlier errors are discovered and resolved, the less expensive their impact will be. Just as the cost of errors increases exponentially the later they are found in the lifecycle, the corollary is also true: finding and dealing with them early can result in exponential savings. In the case of outsourced development, this should happen even before the outsourced vendor is chosen, during development of the Request for Proposal (RFP).

An example of such a case is provided by Knowsys, a Blueprint partner. Knowsys staff were contracted to join, late in the cycle, an RFP process that had gone awry at a major North American financial institution. The company needed to re-architect the entire e-commerce platform for its Wealth Management business. Significant investment had already been made in the RFP process, and when Knowsys arrived, the client was just beginning a series of vendor presentations in which each bidder summarized its proposal for the outsourcing contract to the client’s senior executives. As the presentations continued, the “elephant in the room” kept getting bigger. It had become clear that something had gone terribly wrong.

The requirements specified to the vendors in the RFP were incomplete. There was conflicting information and inconsistent levels of detail. Further analysis revealed that whole business areas had been neglected. Compounding this, subject matter experts had made incorrect assumptions about how certain areas of the business functioned. The seven vendors who bid then layered on their own assumptions to fill in the gaps, making the inaccuracies worse. The result was a series of vendor presentations that were wildly different, almost as if the vendors were addressing seven different problems, none of which was the customer’s. Beyond uncovering these major flaws in the RFP requirements, the event also showed that the process for inviting vendors was flawed. Some had clearly invested huge amounts of time and effort in their proposals, while others had invested much less. None had sufficient familiarity with the customer’s business or situation to point out the obvious flaws.

In effect, the reset button was hit. The executives directed the group, with Knowsys now involved, to redevelop the RFP. This time executive sponsorship was front and center, and all areas of the business were directed to make themselves accessible and to support the initiative. All relevant aspects of the business were thoroughly analyzed and their needs amalgamated into a unified representation of the requirements. Validation was performed to ensure coverage, depth, and clarity. Much more rigor was applied to selecting vendors to bid, in contrast to the open invitation used in the first cycle, and a smaller group of more focused vendors who knew the client’s business was invited. The Knowsys team also made the vendor relationship far more collaborative, while respecting the impartiality required of the bidding process. They ensured there were multiple points of client-vendor contact and put measures in place to validate any and all assumptions. Finally, emphasis was also placed on quality assurance and testing (as opposed to a sole focus on the requirements of what was to be built) to produce a much more rounded picture of the bidders’ proposals.

The net result of these initiatives was tremendous. The second set of presentations, by a much smaller group of bidders, was like night and day compared to the previous round. It was clear that each vendor had a very accurate grasp of the client’s problem and goals. Each proposal was compelling and had unique and interesting variations on their proposed solutions.

A vendor was selected and the project got underway, later than hoped due to the failed initial RFP cycle. Everyone felt much more confident entering such an important development initiative with the specifications they now had, the vendor they had selected, and the proposed solution. That confidence was validated when the project, even in the face of unexpected business changes during the project, was delivered on time and on budget with all success criteria being met. Had this financial institution selected a vendor in the first round, and proceeded with development on that basis, the results would undoubtedly have been quite different.

Conclusion

Outsourcing of software development, already rising steadily, has accelerated in the recent economic climate as companies desperately seek ways to reduce IT costs. The promise of cost savings is realizable, but only for those who focus on three vital requirements control points in the outsourcing arrangement: requirements communication, requirements reference, and requirements validation. Gaining mastery of these through appropriate processes, practices, and automation will dramatically improve the probability of success of the outsourced engagement, delivering the needed cost savings.

Footnotes

[1] Five trends that challenge technology offshoring in 2009, IT World

[2] Thorny Requirements Issues Handbook, 2005, Process Impact, Karl E. Wiegers

[3] IAG Requirements Survey, 2007

[4] MetaGroup, Search CIO Top 10 Risks of Offshore World

[5] IDC Analyst, Melinda Ballou, Offshore your Way to ALM, RedmondMag

Matthew Morgan is a 15-year marketing and product professional with a rich legacy of successfully driving multi-million dollar marketing, product, and geographic business expansion efforts. He currently holds the executive position of SVP, Chief Marketing Officer for Blueprint, the global leader in Requirements Lifecycle Acceleration solutions. In this role, he is responsible for strategic marketing, partner relationships, and product management. His past tenure includes almost a decade at Mercury Interactive (which was acquired for $4.5B by HP Software), where he was the Director of Product Marketing for a $740 million product category including Mercury’s Quality Management and Performance Management products. He holds a Bachelor of Science degree in Computer Science from the University of South Alabama.