Be Expressive – Improve the Clarity of Your Requirements


Make Sure Everyone’s on the Same Page

If you were somehow able to hold a requirement in your hand and ask, “Why do I need this? What is its purpose?” I would offer that you’re holding an agreement. A requirement is how two (or more) people have chosen to express their agreement regarding what is needed from a software application. The assumption is that all parties have the same understanding or interpretation of these written words. Quite often this assumption proves to be false – different stakeholders in fact have different ideas in their minds regarding these same words, and confidently think that others share their vision as they warmly smile and shake hands over the agreement. If only we could have a “Vulcan mind-meld” à la Star Trek, a lot of our requirements issues would be gone.

These issues are possible with each and every written requirement. When you multiply this by hundreds, or sometimes thousands, of requirements and then consider how the requirements must combine to paint a picture of the whole solution, it’s quite understandable why there can be so many surprises in the latter parts of a software project.

The fundamental problem is that traditional legal-type textual requirements are simply not very expressive, which tends to leave a lot open to interpretation. Very often, requirements authors attempt to compensate for this lack of clarity by adding even more legal-style text to elaborate and constrain what they’re trying to communicate. Sometimes this can lessen the range of interpretation as desired; but just as often, it can actually do the opposite, as these new words themselves may contain ambiguities, inconsistencies, or redundancies. These additional words are added to areas where misinterpretation has been detected. But what about all the hidden areas of misinterpretation that have yet to be detected? They simply remain hidden, lying in wait to rear their heads later on in the development cycle.

Stakeholders directly involved in defining the requirements through elicitation, authoring, or review, have the benefit of sometimes hours of discussion and debate that go into formulating the requirements. This rudimentary form of Vulcan Mind-Meld is really quite valuable in avoiding misinterpretation of the written requirement. But what of the requirements consumers who did not have a seat at the definition table, and didn’t have the benefit of all this undocumented communication? It is crucial that developers, testers, outsourcers, sub-contractors, and others who consume requirements understand what they are being asked to build, test and manage. The risk of requirements miscommunication among these key individuals is magnified, since they’re even more likely to misinterpret the original intent.

So in addition to serving as an agreement between parties, the requirements have another key purpose: to communicate what is needed completely, precisely, and unambiguously to others who may not have been intimately involved in their definition.

Have you ever noticed what someone does when they’re having difficulty communicating a thought or concept? They become animated. They start using their hands. They make sketches on the whiteboard. They start pointing at the computer screen and say things like “so imagine this”. In short, they reach out for other, more expressive forms of communication. As they do this, they often expose other areas of misconception or misunderstanding. One by one they tackle these new areas, again with various forms of expression. The same is true with requirements. Increasing the expressiveness of your requirements actually exposes additional areas of miscommunication you never knew were there – in other words, areas where you need to be even more expressive. This effect can be very powerful, and can help to raise the quality of requirements dramatically.

Some go so far as to replace textual statements altogether in favor of other more expressive forms of specification. Personally, I’ve found a combination of the two offers the best solution, and this is the approach I’ll describe.

Setting Context

Software requirements express what is required of the application in order to fulfill some higher-level business need. Requirements could describe how to provide customers with an online flight booking capability to fulfill the business need of increased sales for the airline, or how to withdraw cash to fulfill my business need of going to a movie. Regardless, this business need must be expressed along with the associated business process so that this world in which the software application will function is:

- Understood by all team members, and

- Used as a reference for the project to ensure it stays focused on addressing the business need.

This business context can be expressed as a combination of textual statements with a set of business process diagrams. The textual statements can be categorized according to an appropriate taxonomy (e.g. business need, business goal, business objective, business rule, business requirement, etc.). There are various taxonomies in use, and I won’t use this forum to debate their merits. Business processes are best expressed using business process diagrams. There are numerous notations in use for this, from full-blown Business Process Modeling Notation (BPMN), with its plethora of symbols, to very lightweight notations suitable for sketching processes. Since the purpose of the diagrams is simply to provide context for the software requirements – we’re not trying to re-engineer the business – I generally opt for the simpler notations. All I look for is the ability to support:

- Roles. In other words, who is performing the activity? This is commonly represented using swimlanes.

- Activities. The tasks performed by the role.

- Decisions. The ability to identify the decisions made by the roles and to specify associated conditions.

- Start & End. Clear notation of where the process begins and ends, with the ability to specify any pre- or post-conditions.

- Nesting. The ability to abstract or “nest” portions of a process and denote it with a special symbol.

- Free Form Notes. The ability to add unrestricted notes (i.e. text of any size and position).

I’ve found with these essentials, I’m able to illustrate most processes, and in the odd case where I can’t, I simply augment with notes.

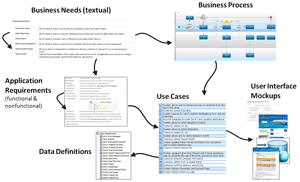

The business need and the process, as you can imagine, are related. In areas where the relationship between the two is noteworthy or illustrative, it makes sense to somehow maintain this relationship via a traceability relationship using whatever requirements tool/platform you have. Conceptually, the context may therefore appear as in Figure 1.

Figure 1 Business Context

Generally, I ensure the business needs are exhaustive in terms of breadth – meaning if there’s a need, I want it to be specified. On the other hand, I selectively apply business process diagrams in areas where the process is business-critical, high-risk, or complex. This is admittedly a fairly simple approach, but I find it adequate in a surprisingly large number of situations. Of course, it can be extended where necessary.

Defining Expressive Requirements

As with the business context, I typically begin with textual statements describing application requirements. I mostly use a categorization scheme and traceability espoused by the Rational Unified Process (RUP), grouping requirements into five main categories: Functional, Usability, Reliability, Performance, and Supportability. (This five-way categorization, known as FURPS, originated at HP.) Each of these has numerous sub-categories which some people use and others don’t. Another popular approach is that promoted by Karl Wiegers in his book Software Requirements. This approach arranges User Requirements, Business Rules, Quality Attributes, System Requirements, Functional Requirements, External Interfaces, and Constraints into a hierarchical traceability strategy. As with categorization of business needs, there are numerous taxonomies for categorizing software requirements, along with many strategies for tracing the relationships among them. You need to decide on one that’s appropriate for you. Regardless, this is simply a way to organize your textual statements, and does little to improve their expressiveness.

Example legal-style text requirements list:

- The system shall allow the user to book a flight

- The system shall allow the user to choose the departure and destination airport

- The system shall allow the user to specify multiple passengers

- The system shall allow the user to specify for each passenger whether they are an adult, senior, or child.

- The system shall allow the user to select either a one-way flight or a round-trip.

One of the most popular ways to improve expressiveness is to think like a user and imagine how they’d use an application. User stories are one way to do this. Another is use cases, which I’ll discuss briefly here.

Instead of a list of capabilities or features to be provided by the application, use cases describe the various scenarios of how the application will be used to accomplish some goal. As an example, imagine an online airline application for booking flights and other common traveler functions. Consider a couple of scenarios:

- Book a round-trip from Dallas to Chicago for two adults

- Book a one-way flight from Toronto to Atlanta for one adult, and one senior

These are different scenarios and will likely entail some different requirements. For example, in the first scenario, we actually have to handle two flights instead of one, because it’s a round-trip. In the second, there may be special requirements for seniors and perhaps senior discounts involved. Furthermore, the second example is an international flight, so there are likely some special requirements around that as well. However, you can look at these two scenarios, and probably think up several more, which are all variants of Booking a Flight, the goal of the user. To describe these various scenarios, we would create a use case called Book a Flight, and within it stipulate in detail how flights get booked. We do this in a dialog style such as …

Step 1. User chooses to book a flight

Step 2. System allows user to choose one-way or round-trip

Step 3. User chooses round-trip

Step 4. System allows user to specify departure location and destination

Step 5. User chooses departure location and destination

Step 6. System allows user to specify number and type of travelers as adult, child, or senior

Step 7. Etc.

Figure 2 Sample Use Case Steps

This is a quite different style than the legal-style text list shown earlier. This dialog describes the same functionality, but from an alternative perspective and style. Just having this alternative vantage point can itself shed new light on the requirements, help communicate what is truly needed, and help expose hidden issues. I would further argue that this form is more expressive than the legal-style text list. First, it is from the perspective of the user, which will allow existing and future end-users to consume it more readily. Further, notice that each statement makes sense only in context of those around it. If you move Step 4 to the end of the list, it will make no sense. In this style, it is quite easy to spot when things are missing or out of place, thereby ensuring completeness and integrity of the requirements.
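To make the "out of place" point concrete, here is a minimal sketch in Python. It assumes a simplified model of my own invention, in which each step either has the system offer a choice or has the user make one; the `Step` class, the `out_of_place` function, and the topic labels are all hypothetical, not part of any tool or standard. The check flags any user choice that appears before the system has offered it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    actor: str   # "User" or "System"
    action: str  # "offers" (system presents a choice) or "chooses" (user makes it)
    topic: str   # what is being offered or chosen (illustrative labels)


# The first six steps of the sample Book a Flight dialog.
book_a_flight = [
    Step("User", "chooses", "book a flight"),
    Step("System", "offers", "trip type"),
    Step("User", "chooses", "trip type"),
    Step("System", "offers", "departure and destination"),
    Step("User", "chooses", "departure and destination"),
    Step("System", "offers", "travelers"),
]


def out_of_place(steps):
    """Return (position, topic) for user choices made before the system offered them."""
    offered = {"book a flight"}  # assumption: the entry point is always available
    problems = []
    for position, step in enumerate(steps, start=1):
        if step.action == "offers":
            offered.add(step.topic)
        elif step.topic not in offered:
            problems.append((position, step.topic))
    return problems


# Move Step 4 to the end of the list, as described above.
moved = book_a_flight[:3] + book_a_flight[4:] + [book_a_flight[3]]

print(out_of_place(book_a_flight))  # []
print(out_of_place(moved))          # [(4, 'departure and destination')]
```

A plain list of "the system shall" statements gives a check like this nothing to work with, because the statements carry no order; the dialog form does.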

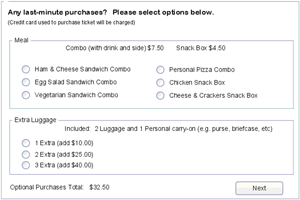

Take a look at the requirements for an application screen below:

- The screen must allow users to select meals for optional purchase

- The screen must allow the user to purchase up to three extra luggage pieces

- The screen must allow for the display of six different meals

- The screen must display the totals of any and all optional purchases

- The user must be able to select and re-select any options on the screen as many times as desired before submitting

- The screen must prominently display the standard luggage allowance

- The screen will inform the user at all times that their credit card used for ticket purchase will be billed for optional meal purchases

- The screen must prominently display the price for meals

These are just a few of the requirements that could be specified for a particular screen. These requirements don’t even get into the various constraints on layouts and appearance that are commonplace when specifying screens.

Also notice the use case steps shown earlier in Figure 2. The system steps describe many things that the screen will need to support.

Instead of relying exclusively on interpretation of this written text, what tends to be far more effective is to provide a screen mockup as shown below.

Figure 3 GUI Mockup

While some requirements authors dispense with the written requirement entirely, most generally favor maintaining some combination of textual statements and screen mockups.

A picture definitely is worth a thousand words, and this approach to visualizing what’s needed is indeed quite powerful. Supported by various visual requirements tools available today, there are some very effective approaches in practice. Just a sampling:

- Wireframes: Simple wireframe mockups of user interfaces, like the one above, are fast and easy to create. Their simplicity means that they often can be updated in real-time during a review session. Another good thing about them is that they don’t look like real screens, so people don’t get confused and think that the wireframes represent a finished product. Keeping the fidelity low, at the level of wireframe, also provides silent guidance to the requirements author to stay at the level of screen concept, and not stray into designing the actual screen.

- High Fidelity Screens: More richly defined screens that actually resemble the end product are at times warranted. Sometimes this happens in high-risk or complex areas of the application and often in collaboration with a user interface designer.

- Screen Markups: Very common in situations where you’re enhancing an existing application, taking a screen-shot and applying markups and annotations is an effective way to quickly communicate what’s needed, and to highlight subtle points.

- Screen Overlays: Similarly powerful in situations where you’re enhancing an existing application, Screen Overlays take a screen shot of the current application, and add screen controls that represent the enhancement. Modern requirements tools will provide an editor allowing you to do this, and a simulation engine allowing those overlays to become live during a requirements simulation.

- System Interfaces: In areas where there’s no user interface, there’s still room for visualizations. Event or sequence diagrams are excellent visuals for illustrating behavior of a system interface.

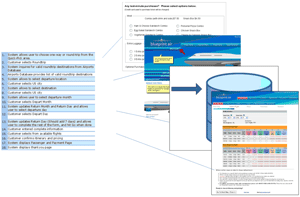

Integrate different forms of expression

The use cases discussed earlier provide a narrative description of how the user interacts with the system each step of the way. In addition, the visualizations mentioned above illustrate what the user will actually see along the way. It only makes sense that these be integrated. While this could be accomplished manually by perhaps creating links between your use case steps and the visuals with office-automation applications, a far more effective solution is to use a modern requirements definition tool that supports this linkage, and provides other associated benefits. The most powerful of these tools allows you to have a mixture of visualization approaches associated with the use cases, as illustrated in the picture below:

Bring Data into the Picture

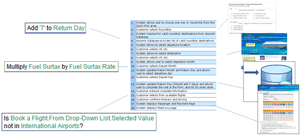

Data is an inherent part of any application. In some systems, it’s not very dominant; in other data-intensive applications, data occupies most of the mind-share. By adding visuals (GUI mockups) to the steps in a use case, we were effectively illustrating how the user interface is affected by actions of the user (use case steps). Data is similarly influenced by actions of the user, and this behavior should be specified as well as part of the requirements.

With things like user screens, you can specify them entirely using text or use visualizations, as discussed earlier. This of course isn’t new since many make sketches or mockups outside of the requirements definition process today, using a variety of graphics programs. One of the key values in what was discussed is making these visualizations an inherent part of the requirements, and associating them with the discrete steps of the use cases at points where they’d appear to the user.

Something very similar is possible with data. You could choose to express data elements only using textual statements or tables. Today, outside of the requirements, many create prototypes with various tools like Microsoft Excel to actually perform data operations and evaluate results. This allows requirements authors not only to validate their data requirements, but also to communicate them more effectively. As with visualizations, modern requirements toolsets allow you to bring data operations within the realm of the requirements and, like the visualizations, associate them with specific steps of the use cases where they will be performed. Below shows conceptually where data operations are being associated with use case steps, similar to how the visualizations were earlier.

Figure 4 Data Incorporated in a Use Case

By adding visuals to the steps, we’re able to illustrate how user interfaces presented by the system are influenced by user actions. Similarly, by adding data operations to steps, we’re able to show not only how data is influenced by user actions, but also how data can influence system behavior.
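As a rough illustration of a data operation attached to a use case step, here is a small Python sketch. Everything specific in it is an assumption made up for the example: the base fare, the percentage charged per traveler type, and the rule that a child cannot travel alone. The first function shows user actions influencing data (the fare total); the second shows data influencing which step the system presents next.

```python
# Hypothetical figures for illustration only; amounts are in cents to avoid rounding.
BASE_FARE_CENTS = 20000
FARE_PERCENT = {"adult": 100, "senior": 90, "child": 50}


def total_fare_cents(travelers, round_trip):
    """Data operation for the 'specify travelers' step: compute the fare total."""
    legs = 2 if round_trip else 1
    per_leg = sum(BASE_FARE_CENTS * FARE_PERCENT[kind] // 100 for kind in travelers)
    return per_leg * legs


def next_step(travelers):
    """Data influencing behavior: an assumed rule that a child cannot travel alone."""
    accompanied = bool({"adult", "senior"} & set(travelers))
    if "child" in travelers and not accompanied:
        return "Show 'unaccompanied child' message"
    return "Show fare summary"


# Round-trip for two adults, and a one-way for one adult and one senior.
print(total_fare_cents(["adult", "adult"], round_trip=True))    # 80000
print(total_fare_cents(["adult", "senior"], round_trip=False))  # 38000
print(next_step(["child"]))                                     # Show 'unaccompanied child' message
```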

Pull it Together

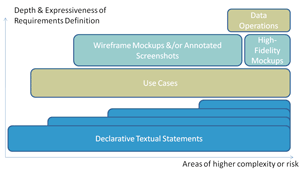

So there are multiple ways you can express requirements. Of these, textual lists are typically the least expressive. As discussed earlier, I tend to use these alternate forms to augment rather than replace text lists. However, I typically don’t use them on all parts of the application. One criterion that tends to guide how I express requirements is requirements complexity; successfully communicating highly complex requirements demands these more expressive forms. Another criterion is risk. If the risk associated with getting the requirements wrong in a certain area of the application is particularly severe, I want to be as expressive as possible with the requirements, thereby reducing the possibility of miscommunication. The graph below provides an example of this:

While all areas of the application are covered by textual requirements at a high-level, I go to the greatest level of detail in those areas that are complex and risky. The functionality of virtually all areas would be illustrated with use cases. Most of those would have wireframe mockups, with a very few, the highest risk and most complex areas, having higher-fidelity renderings. Also in these important areas, I would leverage data operations on the use case steps. In summary, the highest risk/complex areas are specified to the greatest level of detail and in the most expressive manner, while the lowest risk/simple areas are specified much more simply.
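The rule of thumb in the paragraph above can be written down as a tiny Python function. The 1-to-5 scoring scale and the thresholds are assumptions chosen purely for illustration; in practice this is a judgment call rather than a formula.

```python
def forms_of_expression(complexity, risk):
    """Suggest forms of expression for one area of the application.

    Both scores run from 1 (low) to 5 (high). The scale and the
    thresholds below are illustrative assumptions, not a standard.
    """
    score = max(complexity, risk)
    forms = ["textual requirements", "use cases"]
    if score >= 2:
        forms.append("wireframe mockups")
    if score >= 4:
        forms += ["high-fidelity screens", "data operations"]
    return forms


print(forms_of_expression(complexity=1, risk=1))
print(forms_of_expression(complexity=5, risk=3))
```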

Even though it is possible to do this manually with spreadsheets, drawing tools, and other point-solutions, this is where an integrated requirements workbench really shines in its ability to not only support all these forms of expression, but also to maintain requirements integrity, ensure consistency across them, and manage traceability. The diagram below gives a high-level overview of a traceability strategy for this information, including traceability back to the business context. Tracing back to business reference is vital to ensure that all business needs are being addressed. Conversely, a complete traceability strategy ensures that every application functionality that is being specified has an explicit business need.
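The two directions of traceability described above amount to two simple checks. The Python sketch below assumes traceability is stored as a mapping from each requirement to the business needs it traces to; the IDs, names, and function names are hypothetical and do not reflect any particular tool.

```python
# Hypothetical business needs and trace links, for illustration only.
business_needs = {
    "BN-1": "Increase online ticket sales",
    "BN-2": "Sell optional extras",
}
traces = {  # requirement -> business need IDs it traces back to
    "UC-1 Book a Flight": ["BN-1"],
    "UC-2 Purchase Optional Meals": ["BN-2"],
    "UC-3 Export Seat Map": [],
}


def unaddressed_needs(needs, traces):
    """Business needs that no requirement traces back to."""
    covered = {need for targets in traces.values() for need in targets}
    return sorted(set(needs) - covered)


def unjustified_requirements(traces):
    """Requirements that trace back to no business need at all."""
    return sorted(req for req, targets in traces.items() if not targets)


print(unaddressed_needs(business_needs, traces))  # []
print(unjustified_requirements(traces))           # ['UC-3 Export Seat Map']
```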

Using Expressive Requirements

Defining expressive requirements can dramatically improve the outcome of software projects if only in the uncovering of latent errors early and a reduction in miscommunication. Expressive requirements can release even more value if modern requirements toolsets are leveraged. The following are just a few examples of the benefits you can derive by using a modern requirements toolset.

Generate Documents. A requirements workbench will allow you to generate requirements documents automatically from the information in the requirements model. This can be a significant shift for a more traditional requirements organization in that time is spent focused on the requirements themselves, making them more expressive, analyzing them and improving their quality as opposed to being focused on the mechanical tasks of producing and maintaining a document. You still get the document, just without the effort.

Generate Simulations. A requirements workbench will allow you to transform a requirements model into an animated, live simulation. Simulations with expressive requirements make reviews far more effective and, quite frankly, enjoyable. The simulations will leverage all the content in the model including the textual requirements, the use cases, the visualizations, and the data, integrating them into a single “vision” of the future application. Traceability is a vital part of the review process. When reviewing requirements, you must be able to relate them back to the original business need on-demand. Comprehensive requirements workbenches available today expose this traceability not only during requirements authoring tasks, but also during the simulations used for analysis and review.

Comments, feedback, and discussions often result from simulation sessions. These are useful exchanges of ideas that also need to be recorded and managed so that they’re not missed, that they’re accessible, and that you can always refer back to them. A modern requirements workbench will record this informal input alongside the comments during simulation and make it accessible throughout the workbench so people are aware of these discussions and so authors can effect change based on them.

Generate Tests. A requirements workbench will allow you to automatically generate tests from the requirements defined in the model. These tests will cover all possible usage scenarios throughout the model and express for each step in the test any relevant screens, data, or externally referenced materials.

The importance of traceability continues through to the tests as well. Each test generated has complete traceability information in its header that identifies which requirements it helps to prove. As I’m sure you can imagine, this ability to generate tests is hugely valuable to the QA professionals on a software project.
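To show the general idea behind test generation (not how any particular workbench implements it), here is a Python sketch. It assumes a use case can be modeled as an acyclic graph of steps, enumerates every path through it as one test scenario, and gives each test a header listing the requirements it helps to prove. The flow, the `REQ-` IDs, and the function names are all hypothetical.

```python
# Hypothetical use case flow: step -> possible next steps (assumed to have no loops).
flow = {
    "Choose to book a flight": ["Choose one-way", "Choose round-trip"],
    "Choose one-way": ["Enter departure and destination"],
    "Choose round-trip": ["Enter departure and destination"],
    "Enter departure and destination": ["Specify travelers"],
    "Specify travelers": [],
}

# Hypothetical trace links: step -> IDs of the requirements it exercises.
covers = {
    "Choose one-way": ["REQ-5"],
    "Choose round-trip": ["REQ-5"],
    "Enter departure and destination": ["REQ-2"],
    "Specify travelers": ["REQ-3", "REQ-4"],
}


def scenarios(flow, step):
    """Yield every path from `step` to an end step."""
    if not flow[step]:
        yield [step]
        return
    for following in flow[step]:
        for rest in scenarios(flow, following):
            yield [step] + rest


def generate_tests(flow, start, covers):
    """Build one test per scenario, with a traceability header."""
    tests = []
    for number, path in enumerate(scenarios(flow, start), start=1):
        proves = sorted({req for step in path for req in covers.get(step, [])})
        tests.append({"id": f"TEST-{number}", "proves": proves, "steps": path})
    return tests


for test in generate_tests(flow, "Choose to book a flight", covers):
    print(test["id"], "proves", test["proves"])
    for step in test["steps"]:
        print("   ", step)
```

A real model with loops or many alternate flows would need a bound on path enumeration; this sketch sidesteps that by assuming the flow has no cycles.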

Integration with Development and Test Tools. Practitioners, such as developers and testers who consume the requirements, base their work products ultimately on the requirements. It’s therefore very important that the requirements information be available to their toolsets as well. Today’s requirements workbench will integrate with popular UML design toolsets as well as QA testing toolsets to provide them with high-quality, expressive requirements content.

Managing Requirements Change

With more expressive requirements, most change will occur at the beginning of the project when alterations are inexpensive. The modern requirements workbench provides several capabilities to help manage change. Some of these include:

- Traceability: As discussed at several points earlier, the modern requirements workbench supports traceability among all requirements artifacts, provides mechanisms to create relationships that are fast and easy, makes traceability information available as you work, and provides sophisticated and filterable views into traceability for deeper analysis. Traceability is very important for analyzing impact as part of requirements change.

- Versioning: The modern requirements workbench will version all requirements elements such that you can always go back in time to see them as they existed at points in the past.

- History: The modern requirements workbench will provide a comprehensive historical record detailing who changed what, and when they did it.

- Difference: The modern requirements workbench will allow you to select any two versions and instantly see the precise differences between them, for all requirements elements regardless of the form of expression.
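For textual requirements at least, the Difference capability can be approximated with Python's standard `difflib` module, as sketched below. The second version of the requirements is a made-up change for illustration, and this covers text only; comparing mockups or data operations would need something more than a line diff.

```python
import difflib

# Version 1 is taken from the screen requirements earlier in this article;
# version 2 is a hypothetical later revision.
v1 = [
    "The screen must allow the user to purchase up to three extra luggage pieces",
    "The screen must allow for the display of six different meals",
]
v2 = [
    "The screen must allow the user to purchase up to two extra luggage pieces",
    "The screen must allow for the display of six different meals",
]


def changed_lines(old, new):
    """Return only the removed (-) and added (+) lines between two versions."""
    diff = difflib.unified_diff(old, new, fromfile="v1", tofile="v2", lineterm="")
    return [
        line
        for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


for line in changed_lines(v1, v2):
    print(line)
```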

Summary

The key problem for software projects historically has been less-than-perfect requirements. One of the main reasons for poor-quality requirements is miscommunication, owing to a lack of requirements expressiveness. Multiple forms of expression for requirements can reduce this miscommunication. At the same time, integrating these multiple forms in a single unified model allows them to be manageable and scalable. The modern requirements workbench makes this approach for practical, expressive requirements possible.


Tony Higgins is Vice-President of Product Marketing for Blueprint, the leading provider of requirements definition solutions for the business analyst. Named a “Cool Vendor” in Application Development by leading analyst firm Gartner, and the winner of the Jolt Excellence Award in Design and Modeling, Blueprint aligns business and IT teams by delivering the industry’s leading requirements suite designed specifically for the business analyst. Tony can be reached at [email protected].