Business Analysis Benchmark

How Business Requirements Impact the Success of Technology Projects

The Business Analysis Benchmark report, conducted by IAG Consulting in late 2008, presents the findings from surveys of over 100 companies and definitive statistics on the importance and impact of business requirements on enterprise success with technology projects. The survey focused on larger companies and looked at development projects in excess of $250,000 where significant new functionality was delivered to the organization. The average project size was $3 million.

The study has three major sections:

1 Assessing the Impact of Poor Business Requirements on Companies: Quantifying the cost of poor requirements.

2 Diagnosing Requirements Failure: A benchmark of the current capability of organizations in doing business requirements and an assessment of the underlying causes of poor quality requirements

3 Tactics for Tomorrow: Specific steps to make immediate organizational improvement.

In addition to the full text report, these sections have also been published as stand-alone white papers for ease of use. All can be accessed from www.iag.biz.

The study provides a comprehensive analysis of business requirements quality in the industry and the levers for making effective change. The following issues are addressed in the report:

- the financial impact of poor quality requirements;

- the information needed to identify underlying issues critical to success; and,

- the data necessary to target specific recommendations designed to yield performance improvement.

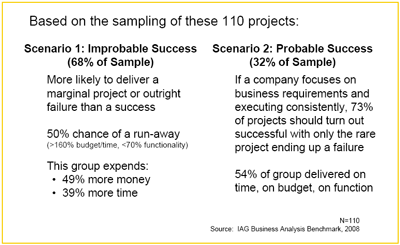

The report finds two basic scenarios for companies:

Scenario 1: Project success is ‘Improbable’. Companies might be successful on projects, – but not by design. Based on the competencies present, these companies are statistically unlikely to have a successful project. 68% of companies fit this scenario.

Scenario 2: Project success is ‘Probable’. Companies where success can be expected due to the superior business requirements processes, technologies, and competencies of people in the organization. 32% of companies fit this scenario.

Almost everyone understands that requirements are important to project success. The data above demonstrates that while people understand the issue, they did not take effective action in almost 70% of strategic projects.

Effective Business Requirements are a process – not a deliverable. The findings are very clear in this regard – companies that focus on both the process and the deliverables of requirements are far more successful than those that only focus on the documentation quality. Documentation quality can only assure that investment in a project is not wasted by an outright failure. The quality of the process through which documentation is developed is what creates both successes and economic advantage. To make effective change, companies must rethink their process of business requirements discovery.

The following are a few key findings and data from the study:

1 Companies with poor business analysis capability will have three times as many project failures as successes.

2 Sixty-eight percent of companies are more likely to have a marginal project or outright failure than a success due to the way they approach business analysis. In fact, 50% of this group’s projects were “runaways” which had any two of:

- Taking over 180% of target time to deliver.

- Consuming in excess of 160% of estimated budget.

- Delivering under 70% of the target required functionality.

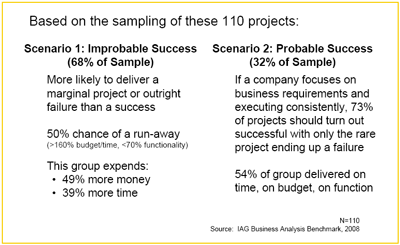

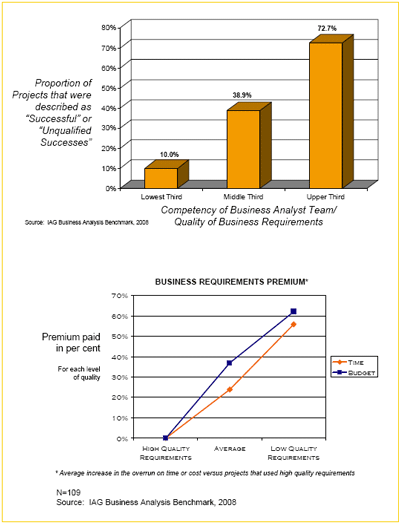

3 Companies pay a premium of as much as 60% on time and budget when they use poor requirements practices on their projects.

4 Over 41% of the IT development budget for software, staff and external professional services will be consumed by poor requirements at the average company using average analysts versus the optimal organization.

5 The vast majority of projects surveyed did not utilize sufficient business analysis skill to consistently bring projects in on time and budget. The level of competency required is higher than that employed within projects for 70% of the companies surveyed.

Almost 70% of companies surveyed set themselves up for both failure and significantly higher cost in their use of poor requirements practices. It is statistically improbable that companies which use poor requirements practices will consistently bring projects in on time and on budget. Executives should not accept apathy surrounding poor project performance – companies can, and do, achieve over 80% success rates and can bring the majority of strategic projects in on time and on budget through the adoption of superior requirements practices.

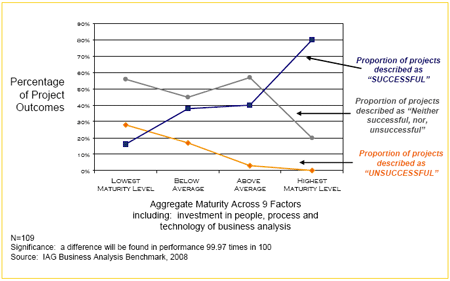

Making Organizational Improvement

The survey findings made it clear that there is no single silver bullet for making organizational improvement. CIOs must look at making improvement across all the areas of people, process, and tools used to support processes to gain organizational improvement. Only a systematic change to all areas of people, process and enabling tools yields material improvement. 80% of projects from the companies which had made these broad-based changes had successful projects.

The findings of the Business Analyst Benchmark describe both the poor state of the majority of companies surveyed, and, a path to performance improvement. To assist the reader, this report also analyzes the data for alternative actions which could be taken to make improvement in order to separate myth from actions that create benefit. The top three findings in this area can be used to change business results:

- 80% of projects where successful from companies with mature requirements process, technology and competencies.

- Auditing three specific characteristics of business requirements documentation and forcing failing projects to redo requirements will eliminate the vast majority of IT development project failures.

- Elite requirements elicitation skills can be used to change success probabilities on projects.

The above survey findings and the underlying statistics are described in detail within the report.

Overall, the vast majority of companies are poor at both, establishing business requirements and delivering on-time, on-budget performance of IT projects. Satisfaction with IT and technology projects wanes significantly as the quality of requirements elicitation drops. This report is both a benchmark of the current state of business analysis capability and a roadmap for success.

The Bottom Line

The challenges in making quantum improvement in business analysis capability should not be underestimated.

Organizations understand conceptually that requirements are important, but in the majority of cases they do not internalize this understanding and change their behavior as a result. The most successful of companies do not view requirements as a document which either existed or did not at the beginning of a project; they view it as a process of requirements discovery. Only companies that focus on both the process and the deliverables are consistently successful at changing project success rates.

For companies that have made the leap to the use of elite facilitation skills and solid process in requirements discovery, there are significant benefits. Not only were these projects rarely unsuccessful, they were delivered with far fewer budget overruns and in far less time.

The report describes the use of poor requirements process as debilitating. Using this word is perhaps unfair, since companies do not collapse as a result of poor quality analysis. In fact, IT organizations and the stakeholders involved will overcompensate through heroic actions to attempt to deliver solid and satisfactory results. However, ‘debilitating’ is an accurate word to describe the cumulative effect of years of sub-optimal performance in requirements analysis when results are compared to competitors who are optimal. Even leaving out the effect of high failure rates and poorer satisfaction with results, the capital investment in information technology of the companies with poor requirements practices is simply far less efficient than companies that use best requirements practices.

To illustrate this inefficiency of capital expenditure on technology at companies with poor requirements practices, use the average project in this study ($3 million):

- The companies using best requirements practices will estimate a project at $3 million and better than half the time will spend $3 million on that project. Including all failures, scope creep, and mistakes across the entire portfolio of projects, this group will spend, on average, $3.63 million per project.

- The companies using poor requirements practices will estimate a project at $3 million and will be on budget less than 20% of the time. Fifty percent of the time, the overrun on the project both in time and budget will be massive. Across the entire portfolio of successes and failures, the company with poor requirements practices will (on average) pay $5.87 million per project.

The average company in this study using poor requirements practices paid $2.24 million more than the company using best practices.

If overruns are common at your company, or if stakeholders have not been satisfied with more than five of the last ten larger strategic projects, there is definitely a problem and your company is likely paying the full poor requirements premium on every project.

Keith Ellis is the Vice President, Marketing at IAG Consulting (www.iag.biz) where he leads the marketing and strategic alliances efforts of this global leader in business requirements discovery and management. Keith is a veteran of the technology services business and founder of the business analysis company Digital Mosaic which was sold to IAG in 2007. Keith’s former lives have included leading the consulting and services research efforts of the technology trend watcher International Data Corporation in Canada, and the marketing strategy of the global outsourcer CGI in the financial services sector.