I Don’t Have Time to Manage Requirements; My Project is Late Already!

An Overview

For those of us who have been given imposed deadlines that often seem arbitrary and unreasonable, managing requirements is one of the last things we want to do on a project. We worry about getting the product built and tested as best we can, and we feel fortunate to gather any requirements at all. However, the lack of a well-managed requirements process can lead to common project issues, such as scope creep, cost overruns, and products that are not used. Yet many project professionals skim over this important part of the project and rush to design and build the end product.

This is the first in a series of articles providing an overview of requirements management. It emphasizes that the discipline of requirements management dovetails with that of project management, and discusses the relationship between the two disciplines. It describes the requirements framework and associated knowledge areas. In addition, it details the activities in requirements planning, describes the components of a Requirements Management Plan, and explains how to negotiate for the use of requirements management tools, such as the Requirements Traceability Matrix, to “get the project done on time.”

The Case Against Requirements Management

It is not uncommon to hear project professionals and team members rationalize why requirements management is not necessary. We commonly hear statements like these:

- “I don’t have time to manage their requirements. I’m feeling enough pressure to get the project done by the deadline, which has already been dictated. My team needs to get going quickly if we have a hope of meeting the date.”

- “Our business customers don’t fully understand their requirements. We could spend months spinning our wheels without nailing them down. I doubt if all the details will emerge until after we implement!”

- “My clients are not available. I schedule meetings only to have them cancelled at the last minute. They don’t have time. They’re too busy on their own work to spend time in requirements meetings. And my clients, the good ones, are working on other projects, their day-to-day jobs, fighting fires, and their own issues.”

- “Managing requirements, when they’re just going to change, is a waste of time and resources. It creates a bureaucracy. It uses resources that could be more productive on other tasks, such as actually getting the project done!”

- “They don’t think we’re productive unless we’re building the end product. They don’t want to pay for us to spend a lot of time in requirements meetings or doing paperwork!”

For many, requirements management equates to bureaucracy and “paperwork,” because the discipline has not yet stabilized and evolved. The International Institute of Business Analysis (IIBA), in its body of knowledge, the Business Analysis Body of Knowledge (BABOK), establishes a requirements framework for the discipline of requirements management.

This series of articles discusses requirements management, emphasizing the planning and the requirements management plan.

Requirements Management Overview

The Project Management Institute (PMI, 2004, p 111), the International Institute of Business Analysis (IIBA, 2006, p 9), and the Institute of Electrical and Electronics Engineers (IEEE, 1990, Standard 610) all define a requirement as a condition or capability needed to solve a problem or achieve an objective that must be met by a system or system component to satisfy a contract, standard, or specification.

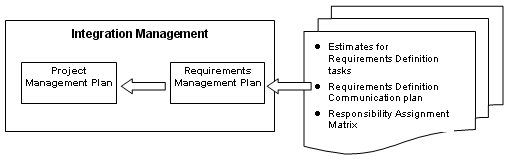

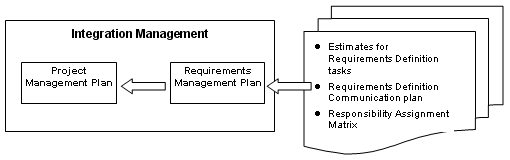

Requirements management includes the planning, monitoring, analyzing, communicating, and managing of those requirements. The output from requirements management is a requirements management plan, which on large projects can be a formal set of documents with many subsidiary plans. Examples of subsidiary documents are a business analysis communication plan, metrics for measuring business analysis work, key deliverables and estimates for the business analysis work effort, and many more. On smaller efforts this requirements management plan can be an informal roadmap. In either case, it is subsidiary to the overall project management plan, which is created, executed, controlled, and updated by the project manager.

Below is an exhibit showing the relationship between the requirements management plan and an example set of plans that are subsidiary to the integrated project management plan. As indicated, the requirements management plan is incorporated into the project management plan, and all the subsidiary requirements management plans are incorporated into the subsidiary plans within the overall project management plan. We will take a deeper look at requirements planning later in this series.

Exhibit 1: The Requirements Management Plan in Relation to Project Management

If care isn’t given to planning business analysis activities, the entire project could go awry. Lack of requirements management is one of the biggest reasons why 60% of project defects are due to requirements and almost half of the project budget is spent reworking requirements defects (Software Engineering Institute’s (SEI) Square Project, updated 5/12/05).

In the upcoming articles of the series, we’re going to focus on requirements planning. But it is also important to understand the overall requirements management framework, which is based on the BABOK. The remaining parts of the series include: Part II – BABOK Overview, Part III – Requirements Planning, and Part IV – The “Right Amount” of Requirements Management.

Elizabeth Larson, CBAP, PMP and Richard Larson, CBAP, PMP are Principals, Watermark Learning, Inc. Watermark Learning helps improve project success with outstanding project management and business analysis training and mentoring. We foster results through our unique blend of industry best practices, a practical approach, and an engaging delivery. We convey retainable real-world skills, to motivate and enhance staff performance, adding up to enduring results. With our academic partner, Auburn University, Watermark Learning provides Masters Certificate Programs to help organizations be more productive, and assist individuals in their professional growth. Watermark is a PMI Global Registered Education Provider, and an IIBA Endorsed Education Provider. Our CBAP Certification Preparation class has helped several people already pass the CBAP exam. For more information, contact us at 800-646-9362, or visit us at http://www.watermarklearning.com/.
