How to Handle Tight Deadlines as a Business Analyst

When you are assigned a complex project that has a short timeframe (as often happens), it can be nerve wracking – I know this from experience. It’s like driving a racing car – you have to push close to the limits but any error can throw you completely off the track.

When you are assigned a complex project that has a short timeframe (as often happens), it can be nerve wracking – I know this from experience. It’s like driving a racing car – you have to push close to the limits but any error can throw you completely off the track.

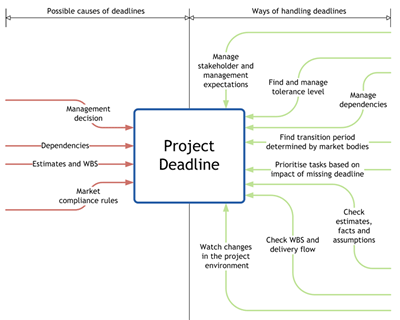

The first thing that I do when I get a project like that is considering the reasons for the deadline. You can end up with a tight deadline for a variety of reasons. The deadline may be mandated by the management. It can be determined by interdependencies between projects. It can be defined by market compliance rules. In other cases it’s estimated using the work breakdown structure for the project but ends up being too short because of wrong assumptions.

So how can you as a business analyst make sure that circumstances don’t control you and your team, and you deliver your project successfully? Keep in mind that sometimes you can be successful even if you don’t meet the original deadline!

Here is a visual summary of deadline causes and ways of handling them:

Let’s look into the ways of handling deadlines in more detail.

Manage the Manager

Management sometimes sets up deadlines with a good “buffer” to allow themselves time for decision making at the end of the project, or because they don’t expect to get results on time and want to push things along by moving the deadline forward. This can be best mitigated by having good communication with the management.

However, what can you do if your manager does set an unreasonably short deadline? Find the tolerance level of your project sponsor (management) and of the key stakeholders who can influence the sponsor. Talk to end users to understand the severity of not delivering on time. There can be scope for negotiation.

Shuffle Dependencies

If your deadline is constrained by dependencies, you can talk to project managers of the upstream and downstream projects to get a better understanding of the interconnections. You might be able to find a way to reorganise things and either get what your project needs delivered earlier, or move the deadline for your own project.

Be Smart About Compliance Deadlines

If you’re working on a compliance project, it may have a firm deadline established by the market bodies. In this case find out if there is a transition period. It is usually provided because market participants have differing levels of compliance and don’t have the same resources available to make the transition. You can also evaluate the impact of breaching the deadline (such as fines for non-compliance), and prioritise the parts of the project which would have the highest financial impact if they are overdue.

Double Check Estimates

When it comes to deadlines defined by estimation, it’s a good idea to double check the estimates. Ask what facts and assumptions were taken into account when the task was initially estimated.

Watch Out For Changes

Once the project starts, you have to watch out for changes in the project environment. Changes will affect project completion time, so work with the team and stakeholders to update the WBS and the schedule.

Organising Work On Projects with Fixed Deadlines

After you’ve applied the practices above and you are sure that you’ve done everything you can to negotiate a reasonable deadline for your project, the next phase is organising your work in the best possible way to meet the deadline.

I’ve found that the following practices help me complete projects on time:

- Determine the business context which will be affected by the change to status quo

- Define the scope of the solution required to satisfy the identified business need

- Plan short iterations to verify the project direction

- Align the solution with the existing business processes and IT infrastructure

Each of these practices is discussed in more detail below.

Determine Business Context

Completing this step successfully often determines the success or failure of the project.

Many organizations that operate in a competitive environment have well defined and standardized processes. Many others don’t however, so be prepared to discover them. Explore the business processes which may be affected by the new solution. Learn which systems are used by the business within these processes. Embedding new solutions into these business landscapes should be considered thoroughly to reduce resistance to changes and exclude redundancy in project management, solution delivery and transition to the new state. If done right, it also gives a business analyst an opportunity to find ways to add value to the business.

The rationale for the project should be identified by the project manager, while the business analyst should identify business drivers and actual business needs.

Define Solution Scope

This is the exciting part of the project, but defining solution scope has never been an easy task. Short timeframes and technological changes which may occur during your project make it even more challenging.

In general, to cope with this task the project team needs a solid foundation to build on – well documented processes and good infrastructure. Knowing and using best industry practices can often point you towards defining a sustainable solution and save exploration and research time.

When it comes to defining solution scope, my approach is to use only the “must” requirements for the “initial” solution, and prioritise the remaining “should” requirements into subsequent phased releases (“final” solution).

You must work closely with the solution architect and play an active role in exploring available options. Often the overlap between business analysis and system architecture saves a lot of time – I have saved up to a third of project time by ensuring that the architect could use my documents as a useful starting point in producing a detailed design of the solution.

Plan Short Iterations

I’m still on the fence with regards to the Agile method. Its value is clear in software development (at least for certain kinds of projects) but when it comes to business analysis, I’m not so sure. However, short iterations are one useful technique in Agile which can reduce project time. Use them to get a summary of the completed and outstanding tasks, evaluate changes to the project scope, and identify feasible shortcuts.

Project manager and business analyst need to present a unified front in dealing with business stakeholders. Face to face communication is essential to make short iterations work for analysing the current situation, required changes and making decisions on the next steps. Informal communication style helps too – really, there’s just no time for strict formalities if you want to get things done. It’s very important for the project manager to arrange a “green corridor” for access to authorities and clear the way for the team to focus on delivering rather than struggling with bureaucracy.

As a business analyst, you also have to do your share to deliver results quickly by being professional and active in all your activities (industry research, compliance requirements and so on). Make sure that communication is well maintained between everyone involved in the project.

Align Business and IT Infrastructure

Most of the time new solutions are embedded into the existing environment. It’s a good idea to make maximum use of the existing components and processes to make the introduction of the new solution less intrusive and to minimise the number of temporary patches and business interruptions.

I try to present the solution in terms of interacting services to achieve this. I transform business references to applications and systems into services and show how they could interact. This approach allows me to show the business users how all pieces of the business, including external parties and outsourced services, come together and how the new solution will improve overall efficiency.

Conclusion

Whenever you get a project with a short deadline, don’t forget that there are two major considerations: what can be done to change the deadline, and what is the best way to organise your work to meet the deadline.

The practices presented in this article should help you address each of these considerations.

Don’t Forget to Leave Your Comments Below

Sergey Korban is the Business Analysis Expert at Aotea Studios, publisher of innovative visual learning resources for business analysts. We think business analysis should be easy to learn! We deliver practical knowledge visually, with a minimum of text, because that’s an efficient way to learn. Find out more at http://aoteastudios.com

People often ask me “how do we know when we are done with the requirements phase?” My opinion? You are never completely done with requirements but criteria do exist by which you can assess the completeness of your requirements and gauge your readiness to proceed with design.

People often ask me “how do we know when we are done with the requirements phase?” My opinion? You are never completely done with requirements but criteria do exist by which you can assess the completeness of your requirements and gauge your readiness to proceed with design.