Introduction

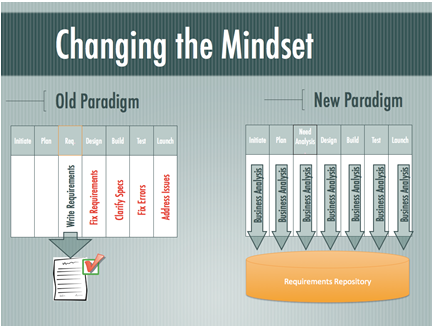

This article is inspired by two more of Alistair Cockburn’s “gold nuggets” found in his book Writing Effective Use Cases [1]. The first nugget is the idea of a goals hierarchy, which represents, among other things, how the goals of a system’s use cases are derived from and trace back to larger goals of the system’s users. The second nugget is his “striped trousers” explanation of use case scenarios, which are sequences of use case steps that represent different paths through a use case in pursuit of the use case’s goal. This article also demonstrates a convention for organizing a use case’s steps based on the established approach of writing use case steps nonredundantly by using flows of different kinds. It even introduces a new kind of flow and advocates its use over that of the extension use case in specific circumstances.

Goal hierarchies

Cockburn provides a mental model of how people and organizations function on the basis of a hierarchy of goals, where each goal, except those at the highest level, belongs to one or more higher-level goals, and each goal, except those at the lowest level, breaks down into multiple lower-level goals.

- Moving down the hierarchy answers “How?” to show how a certain goal can be achieved.

- Moving up the hierarchy answers “Why?” and provides a rationale for why a certain goal exists.

These goals are pursued by different role players (people), organizations (groups of people) and systems (automated resources) inside and outside an organization.
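To make the "How?" and "Why?" directions concrete, here is a minimal Python sketch of such a hierarchy. The `Goal` class, its method names and the sample goals are assumptions made for illustration; they do not come from [1].

```python
class Goal:
    """One node in a goals hierarchy (hypothetical model, not taken from [1])."""

    def __init__(self, name):
        self.name = name
        self.higher = []  # goals this one serves: the "Why?" direction
        self.lower = []   # goals this one breaks down into: the "How?" direction

    def break_down_into(self, *subgoals):
        """Link each subgoal below this goal and return this goal."""
        for sub in subgoals:
            self.lower.append(sub)
            sub.higher.append(self)
        return self

    def how(self):
        """Moving down: how can this goal be achieved?"""
        return [g.name for g in self.lower]

    def why(self):
        """Moving up: why does this goal exist?"""
        return [g.name for g in self.higher]


# Illustrative goals only.
furnish = Goal("Furnish home office")    # a person's overall goal
place_order = Goal("Place order")        # a mini goal served by a supporting system
pay = Goal("Provide payment details")    # a lower-level goal within that interaction
furnish.break_down_into(place_order)
place_order.break_down_into(pay)

print(place_order.how())  # ['Provide payment details']
print(place_order.why())  # ['Furnish home office']
```

Because `higher` is a list, a goal can serve more than one higher-level goal, which matches the "one or more higher-level goals" wording above.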

Use case goals

A use case arises when a person (or a system) with an overall goal needs to interact with a supporting system in order to achieve a mini goal as a step towards reaching the overall goal. The use case represents the supporting system’s ability to deliver the user’s mini goal, and this is the use case’s overall goal. This can be reflected no more clearly than by naming the use case after that goal. The use case’s specification details how the use case’s overall goal is broken down into its own mini goals, represented by steps and groups of steps.

Thus, the user’s overall goal, the user’s mini goal/use case’s overall goal, and the use case’s mini goals are seamlessly incorporated into an organization’s goals hierarchy.

The beauty of this perspective is that:

- System use cases arise from and trace back to the goals of a system’s users, rather than a use case modeler’s imagination.

- The idea of a goals hierarchy that integrates the goals of groups of people with the goals of people, the goals of people with the goals of supporting systems, and the goals of supporting systems with the goals of their internal steps provides a single paradigm for how an organization functions across all of its levels in both manual and automated ways.

Use case scenarios

A use case scenario is a sequence of steps that represents a single use case execution (a scenario is a possible path through a use case specification).

Cockburn presents a diagram (Figure 2.2 in [1]), whose originality and quirkiness are only exceeded by its effectiveness. He calls it the “striped trousers” view of a use case and its scenarios. In it:

- The belt represents “the [use case] goal that holds all the scenarios together.”

- One leg “is for the scenarios that end in success.”

- The other leg “is for the scenarios that end in failure.”

- “Each stripe corresponds to a scenario.”

- “The first stripe on the success leg [is] the main success scenario.”

- “The leg’s remaining stripes are [all] other scenarios that ultimately end in success – some through alternate success paths and some after recovering from an intermediate failure.”

- The stripes on the failure leg are scenarios that encounter one or more intermediate failures, possibly recover from some, but always fail eventually.

As Cockburn says, this model “is useful for keeping in mind that every use case has two exits, that the [initiating] actor’s goal binds all the scenarios, and that every scenario is a simple description of the [use case] succeeding or failing.”

This strikes me as one of the greatest contributions to use case modeling.

Use case flows

Cockburn points out, “We won’t actually write every scenario separately from top to bottom. That is a poor strategy because it is tedious, redundant, and hard to maintain.”

Instead, a use case can be written nonredundantly, starting with an unconditional main flow that is subsequently enhanced with conditional additional flows of different kinds, where a flow is a sequence of steps. The main flow equates to “the main success scenario” and each additional flow represents a portion of one or more of the remaining scenarios.

The UML doesn’t deal with flows. Cockburn only refers to “the main success scenario” (main flow) and “extensions” (additional flows). Refining this view, the following sections present five different kinds of flow by providing a definition, diagram and simplified example for each. The purpose of the examples is to illustrate a clear, consistent and scalable writing convention for representing a use case’s structure (they’re not meant as full-fledged use case specifications).

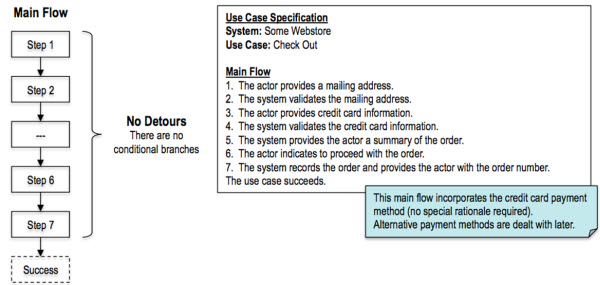

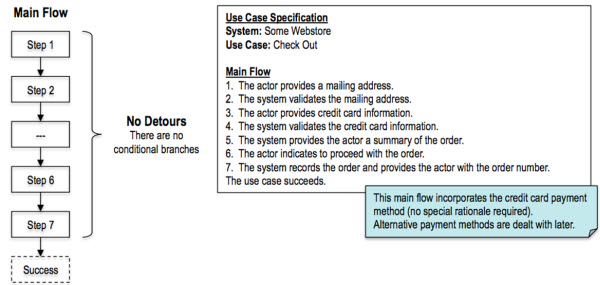

The main flow

Definition: An unconditional set of steps that describe how the use case goal can be achieved and all related stakeholder interests can be satisfied. Each step is essential to achieving the use case goal (no step can be skipped), and each step succeeds.

Cockburn calls this “the main success scenario,” and others use the terms “the happy path,” “the basic flow” and “the normal course of events.” My preference is for “the main flow” because it’s short and ties in well with the names of the other kinds of flow.

The next four sections outline what collectively can be called additional flows.

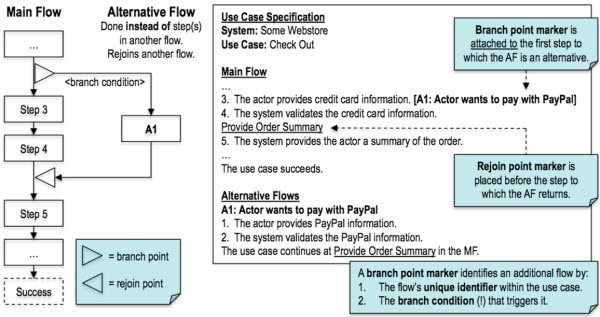

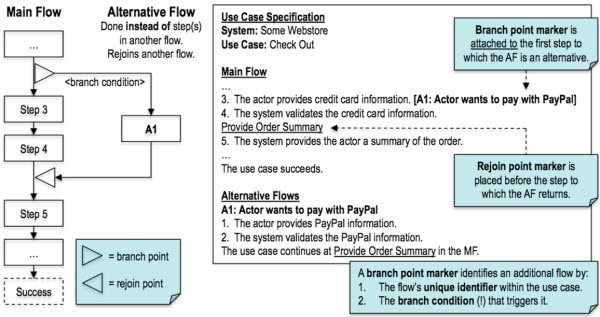

An alternative flow

Definition: A conditional set of steps that are an alternative to one or more steps in another flow (the alternative flow is performed instead of the other step or steps), after which the use case continues to pursue its goal.

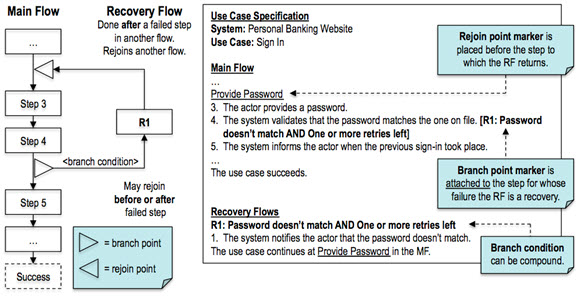

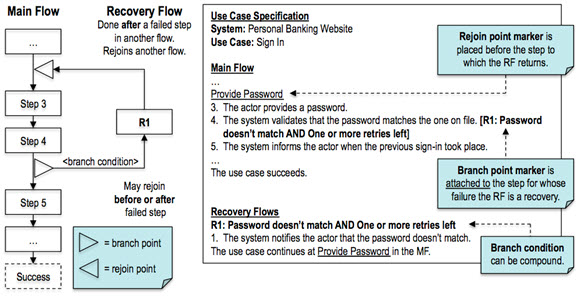

A recovery flow

Definition: A conditional set of steps that are a response to the failure of a step in another flow (the recovery flow is performed after the other step), after which the use case continues to pursue its goal.

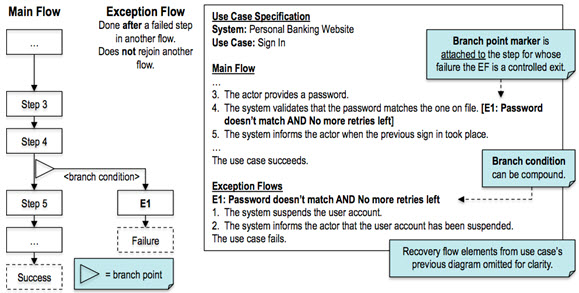

An exception flow

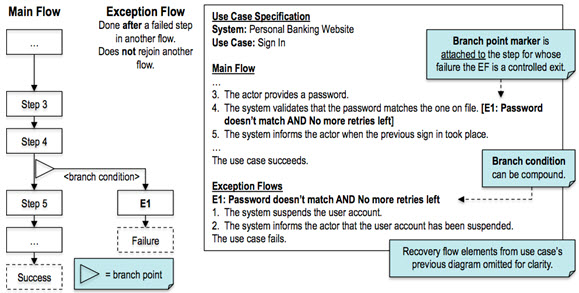

Definition: A conditional set of steps that are a response to the failure of a step in another flow (the exception flow is performed after the other step), after which the use case abandons the pursuit of its goal.

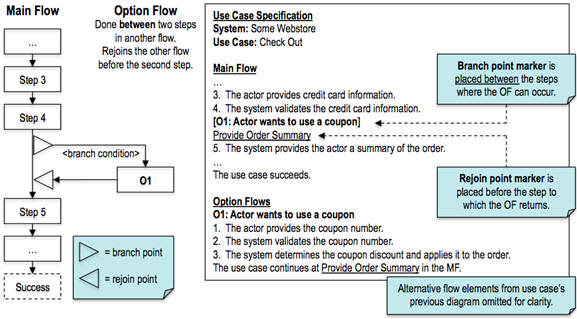

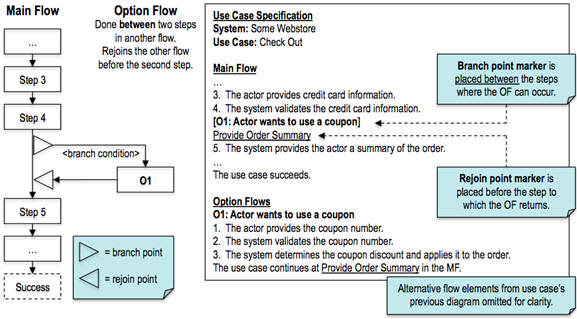

An option flow

Definition: A conditional set of steps that represent a nonessential option available between two steps in another flow (the option flow is performed between the two steps), after which the use case continues with the second of those steps.

The option flow’s main purpose is to represent behavior that is nonessential to achieving the use case goal (the behavior can be skipped) but that still contributes to that goal in some way (the behavior only has meaning in the context of that goal).

An “option” is like an optional feature in a new car (e.g., sun roof). Choosing the option contributes to the goal (order a car), but you can order a car with or without the option (a sun roof is not essential). Continuing the analogy for another term in this article, a car’s possible engine sizes beyond the standard size are “alternatives” to the standard size (but having an engine of some size is essential).
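The five definitions can be summarized in a small Python sketch. This is only one possible reading: the `FlowKind` enum and the helper functions are hypothetical names, and the rule that a scenario lands on the failure leg exactly when it passes through an exception flow is my interpretation of the definitions, not a statement from [1].

```python
from enum import Enum


class FlowKind(Enum):
    MAIN = "main"
    ALTERNATIVE = "alternative"
    RECOVERY = "recovery"
    EXCEPTION = "exception"
    OPTION = "option"


# Kinds defined as a response to the failure of a step in another flow.
FAILURE_RESPONSES = {FlowKind.RECOVERY, FlowKind.EXCEPTION}


def is_conditional(kind):
    """Only the main flow is unconditional."""
    return kind is not FlowKind.MAIN


def continues_toward_goal(kind):
    """Only an exception flow abandons the pursuit of the use case goal."""
    return kind is not FlowKind.EXCEPTION


def trouser_leg(scenario):
    """Classify a scenario, given as the kinds of flow it passes through."""
    if all(continues_toward_goal(kind) for kind in scenario):
        return "success"
    return "failure"


print(trouser_leg([FlowKind.MAIN]))                                        # success
print(trouser_leg([FlowKind.MAIN, FlowKind.RECOVERY, FlowKind.OPTION]))    # success
print(trouser_leg([FlowKind.MAIN, FlowKind.RECOVERY, FlowKind.EXCEPTION])) # failure
```

The second and third calls correspond to the stripes that recover from an intermediate failure: one still ends in success, the other fails eventually.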

On point markers

Before taking a closer look at the option flow, here are some comments about branch point markers and rejoin point markers.

Advantages.

- There is no need to reference actual step numbers (the convention used in [1]); these change when steps are added, deleted or reordered, and if we forget to update their references then the use case becomes corrupted.

- A flow’s branch points and rejoin points are immediately obvious from the flow’s own description, rather than only from the descriptions of the additional flows that branch off the flow (the convention used in [1]).

Placement of branch point markers.

- As the examples show, a branch point marker for an alternative, recovery or exception flow is attached to a main flow step, rather than placed before or after the step; this keeps markers from visually dominating the main flow.

- This doesn’t apply to the option flow, which by its very nature occurs between two steps.
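The first advantage can be illustrated with a short Python sketch. The marker name `PAYMENT`, the step texts and the `find_step` helper are all made up for this illustration and are not the notation used in the examples; the point is only that a reference by marker survives renumbering, whereas a reference by step number would not.

```python
def find_step(main_flow, marker):
    """Return (step number, step text) for the step that carries the marker."""
    for number, (text, step_marker) in enumerate(main_flow, start=1):
        if step_marker == marker:
            return number, text
    raise KeyError(marker)


# Each step is (text, marker); most steps carry no marker.
main_flow = [
    ("Customer selects items", None),
    ("Customer provides payment details", "PAYMENT"),
    ("System confirms the order", None),
]

before = find_step(main_flow, "PAYMENT")

# A new first step shifts every step number by one.
main_flow.insert(0, ("Customer signs in", None))

after = find_step(main_flow, "PAYMENT")

print(before)  # (2, 'Customer provides payment details')
print(after)   # (3, 'Customer provides payment details')
```

An additional flow that had recorded "step 2" as its branch point would now point at the wrong step, while one that names the marker still resolves to the payment step.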

More on the option flow

What an option flow is not

An option flow is not:

- Part of the main flow, because an option flow is conditional and nonessential to achieving the use case goal, which is the very opposite of the main flow.

- An alternative flow, because an option flow isn’t an alternative to a step or steps.

- A recovery flow, because an option flow is not a response to a failed step.

- An exception flow, because an option flow is not a response to a failed step and doesn’t mean the use case failed.

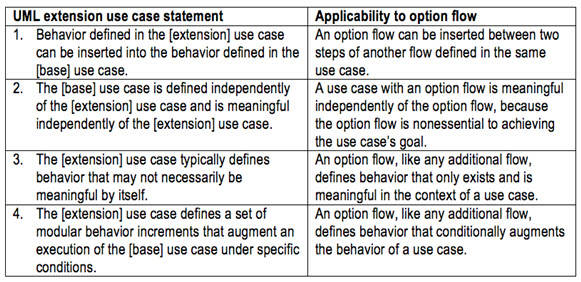

Use an extension use case instead?

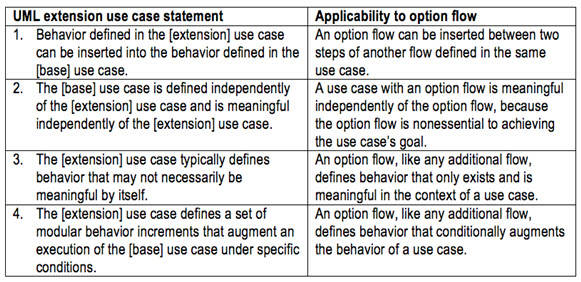

You may wonder whether nonessential goal-related behavior can be represented as an extension use case instead, given the UML’s extension use case description in [2]. To help answer that, the next table lists key UML statements about the extension use case and their applicability to the option flow.

At first blush, the above, and in particular its first two statements, suggests it’s reasonable to represent nonessential goal-related behavior as an extension use case. However, for a conclusive answer, we turn to the extension use case interpretation outlined in [3].

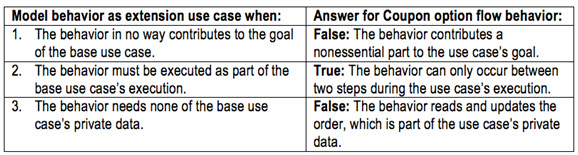

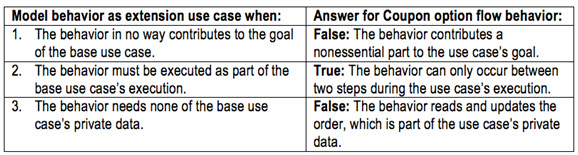

When to use an option flow and when to use an extension use case

Based on [3], the next table repeats when behavior can be modeled as an extension use case and gives answers for the behavior of the earlier Coupon option flow.

Regarding the False answers for the Coupon option flow behavior:

- The third answer is soft: the order could be declared public data, which would change the answer to True.

- The first answer is hard: it is and remains a fact that the Coupon option flow behavior contributes to the use case goal, so the answer is always False.

This leads to the following conclusion:

- Nonessential behavior that in some way contributes to the use case goal must always be modeled as an option flow and never as an extension use case.

- Nonessential behavior that doesn’t contribute to the use case goal in any way may be modeled as an extension use case (when all three questions are answered with True) or as an option flow (when the third question is answered with False).
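As a rough sketch, this conclusion can be written as a small Python function that takes the answers to the three questions. The function name is hypothetical, and one case is my own assumption: the conclusion does not say what to do when only the second answer is False, so the sketch falls back to an option flow there.

```python
def modeling_construct(first, second, third):
    """Suggest a construct for nonessential behavior from the three answers.

    A False first answer means the behavior contributes to the use case goal.
    """
    if not first:
        # Goal-related behavior: always an option flow.
        return "option flow"
    if second and third:
        # All three answers are True: an extension use case may be used.
        return "extension use case"
    # Third answer False, or (assumption) second answer False.
    return "option flow"


print(modeling_construct(False, True, True))  # option flow
print(modeling_construct(True, True, True))   # extension use case
print(modeling_construct(True, True, False))  # option flow
```

The first call shows why the first answer is decisive for the Coupon option flow behavior: even with the third answer changed to True, a False first answer still yields an option flow.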

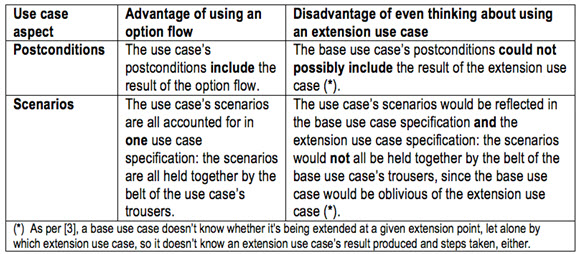

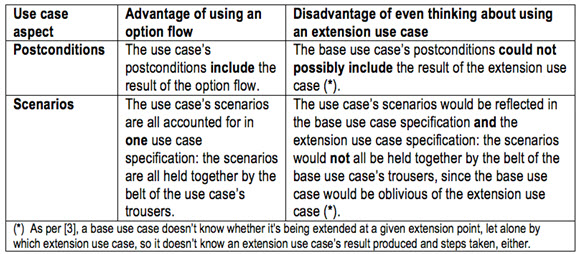

Other differences between the option flow and the extension use case

Key to the above conclusion is whether nonessential behavior contributes in some way to a use case’s goal or not. When it does, using an option flow has undeniable advantages over even thinking about using an extension use case. These aren’t considered in [3] but do reinforce the above conclusion, which is based on [3], as shown in the following table.

Thus, the extension use case turns out to be an inappropriate choice for modeling nonessential goal-related behavior; in contrast, the option flow is ideal for this.

Benefits of using the option flow

Ease of producing use case models:

- Only need to model one use case for a given goal, not two or more (prevents “use case bloat”).

- No need to use the special extension use case construct, its involved UML diagramming convention and a custom writing convention; in contrast, writing an option flow and its branch and rejoin points follows the convention used for all additional flows.

Ease of consuming use case models:

- It’s easier to consume one use case for a given goal than two or more (most consumers will agree with this).

- A use case’s postconditions and scenarios are all in one place (great for all consumers, but especially for testers).

In conclusion

It is my hope that this article will contribute to crisp, clear and consistent use case models (including diagrams and specifications), to the benefit of their producers and consumers and of the organizations that employ them.

References

[1] Alistair Cockburn, Writing Effective Use Cases, 12th Printing, November 2004.

[2] Object Management Group (OMG), OMG Unified Modeling Language™ (OMG UML), Superstructure, Version 2.4.1, Section 16.3.3, Description.

[3] Willem Van Galen, Excavating the extension use case, 10 July 2012 (Part 1) and 24 July 2012 (Part 2).


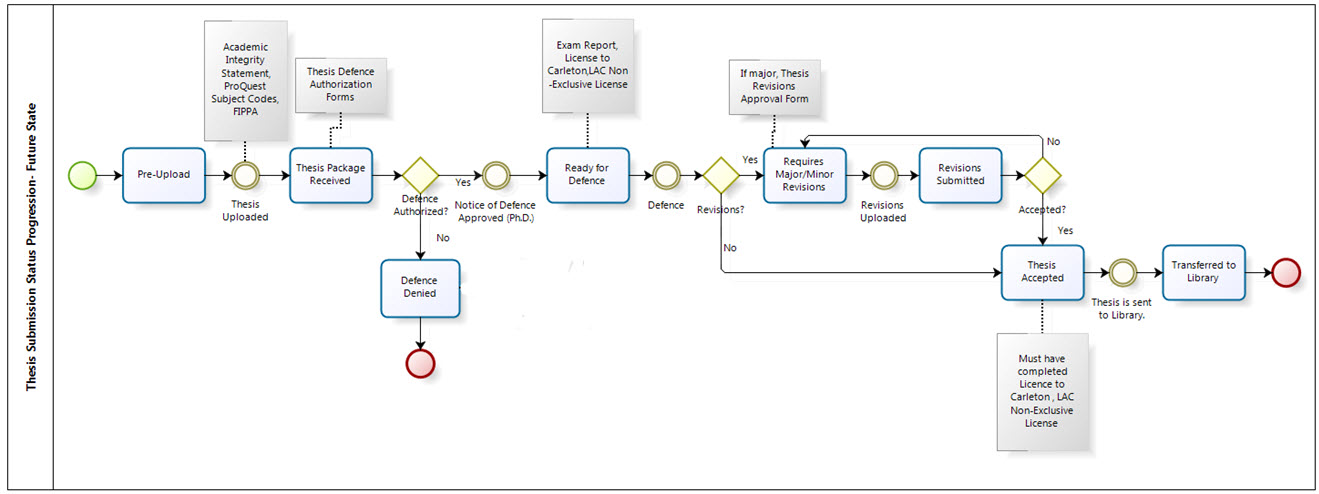

To give you some background about the project that the State Transition Diagram presented below is based on, the submission of Master’s and Ph.D. theses in my institution is not overly complicated, but there are many different forms that must be completed by a variety of people, including students, administrative staff, and faculty. I needed an easier way of explaining all the steps of the process and what forms were being released at each step. Enter the State Transition Diagram.

To give you some background about the project that the State Transition Diagram presented below is based on, the submission of Master’s and Ph.D. theses in my institution is not overly complicated, but there are many different forms that must be completed by a variety of people, including students, administrative staff, and faculty. I needed an easier way of explaining all the steps of the process and what forms were being released at each step. Enter the State Transition Diagram.

Introduction

Introduction